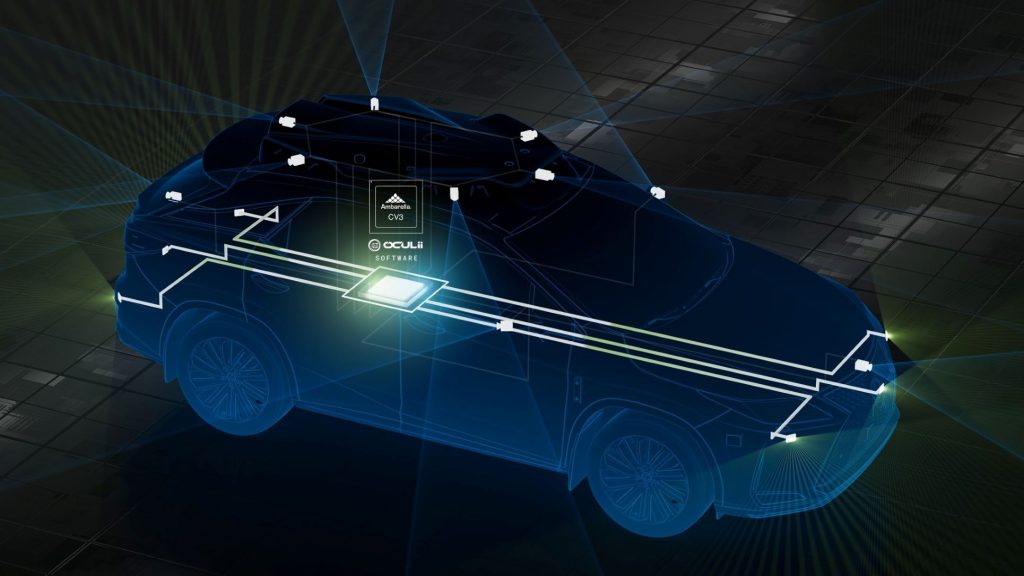

Ambarella Inc., the Santa Clara, CA-based edge AI semiconductor company, earlier this week announced what it says is the world’s first centralized 4D imaging radar architecture that allows both central processing of raw radar data and deep, low-level fusion with other sensor inputs including cameras, lidars, and ultrasonics. The breakthrough architecture provides greater environmental perception and safer path planning in AI-based systems ranging from SAE Level 2+ ADAS (advanced driver-assistance systems) to Level 5 autonomous driving systems, as well as in autonomous robotics.

It features radar technology from Ambarella’s recent Oculii acquisition, including what is said to be the only AI software algorithms that dynamically adapt radar waveforms to the surrounding environment. These provide high angular resolution of 0.5 degrees, an ultra-dense point cloud of up to tens of thousands of points per frame, and a long detection range of more than 500 m. All of this is achieved with an order of magnitude fewer MIMO (multiple input multiple output) antenna channels, which reduces the data bandwidth and achieves significantly lower power consumption than competing 4D imaging radars.

To create the cost-effective new architecture, Ambarella optimized the Oculii algorithms for its CV3 AI domain controller SoC family and added specific radar signal-processing acceleration. The CV3’s industry-leading AI performance per watt offers the high compute and memory capacity needed to achieve high radar density, range, and sensitivity. Additionally, a single CV3 can efficiently provide high-performance, real-time processing for perception, low-level sensor fusion, and path planning, centrally and simultaneously, within autonomous vehicles and robots.

“No other semiconductor and software company has advanced in-house capabilities for both radar and camera technologies as well as AI processing,” said Fermi Wang, President and CEO of Ambarella. “This expertise allowed us to create an unprecedented, centralized architecture that combines our unique Oculii radar algorithms with the CV3’s industry-leading domain control performance per watt to efficiently enable new levels of AI perception, sensor fusion, and path planning that will help realize the full potential of ADAS, autonomous driving, and robotics.”

The radar status quo

In the lead-up to the announcement, Steven Hong, VP and General Manager of Radar Technology at Ambarella, provided some insight exclusively to Inside Autonomous Vehicles on his company’s centralized 4D imaging radar architecture breakthrough. Hong is the former CEO and co-founder of AI radar perception software developer Oculii, which was acquired by Ambarella last November.

He says that the perception systems for autonomous vehicles like self-driving cars and robots have to be extremely high-performance, cost-effective, and power efficient. It’s the combination of Oculii software with Ambarella’s industry-leading silicon that enabled the new class of radar that he and other company execs think is going to be very disruptive for the industry.

“Efficiency and optimization of power and performance are really important, and this is something that we think we have a solution to really address,” he said.

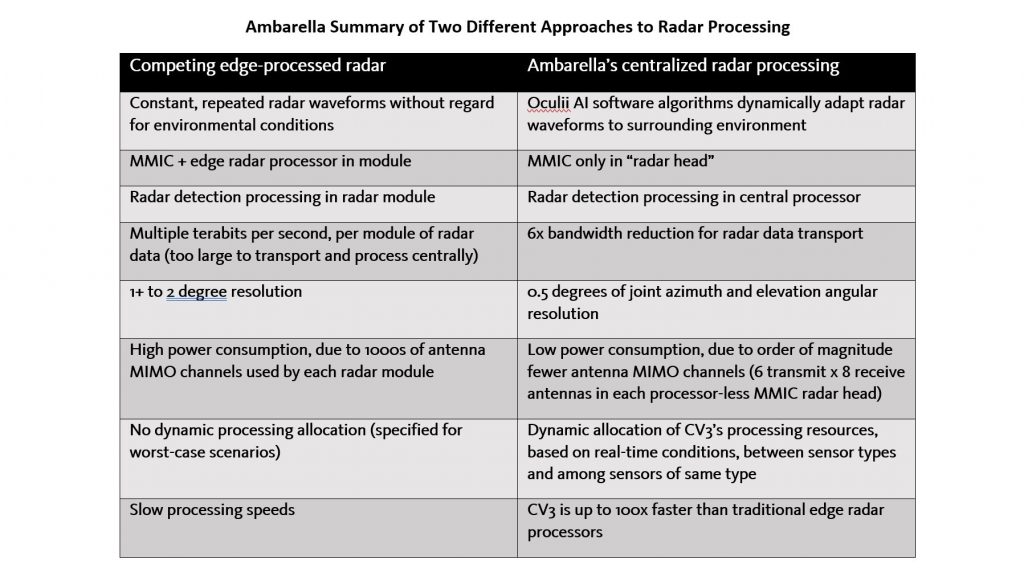

As Hong explained, radar sensor data have traditionally been generated by antennas inside a module and processed at the edge inside that same module, with the output then shipped somewhere else. Camera sensor data are handled differently: they are typically streamed to a central location, where they are aggregated and processed by a high-performance processor running at much higher speeds with more memory. The centralized approach has many advantages because resources can be shifted around, and the sensor is “protected” from the expensive brains, he said.

This is not traditionally done with radar because the amount of data typically generated is too large to centralize without too much latency. That’s the case because, to get high enough resolution for really high performance, a system needs more antennas, which equates to more data, effectively higher cost of transport, and more latency to move the data.

For an imaging radar, hundreds if not thousands of antennas are needed to get about 1-2 degrees of spatial elevation and azimuth resolution, said Hong. All those antennas lead to tens of terabytes worth of data, which effectively makes it not cost-effective or efficient to transport. The data set issues of competing 4D imaging radar technologies are multiplied for the six or more radar modules required for autonomous vehicles.

Rather than moving that amount of data, companies have put the processor inside the module. In a negative self-reinforcing cycle, higher resolution means more antennas, greater processing, and bigger modules.

In addition, radar systems with thousands of antennas can consume 30-50 W of power, said Hong. When a customer is trying to deploy a sensor behind a bumper, windshield, or another location with not much airflow, it is hard to justify the power budget to dissipate the heat.

“This becomes really the bottleneck of how much compute and how much resolution you can achieve with one of these traditional designs,” said Hong. “This is really the challenge of radar and, in many ways, why radar has lagged behind camera and lidar when it comes to performance and resolution.”
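A rough tally of the power figures Hong cites shows why the heat budget becomes a limit. This is a minimal sketch, assuming exactly six modules (the article says six or more) and the quoted 30-50 W per module; the function name is illustrative and not taken from any vendor tool.

```python
def total_radar_power_w(per_module_w: float, num_modules: int) -> float:
    """Total electrical power drawn by all radar modules, in watts."""
    if per_module_w < 0 or num_modules < 0:
        raise ValueError("power and module count must be non-negative")
    return per_module_w * num_modules


# Assumption: exactly six modules; the article says "six or more".
NUM_MODULES = 6

low_w = total_radar_power_w(30.0, NUM_MODULES)   # lower bound quoted by Hong
high_w = total_radar_power_w(50.0, NUM_MODULES)  # upper bound quoted by Hong

print(f"{low_w:.0f}-{high_w:.0f} W across {NUM_MODULES} modules")
# 180-300 W across 6 modules
```

Under these assumptions the radar modules alone would draw a few hundred watts, all of it dissipated in locations with little airflow.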

Different approach

Oculii technology reduces the antenna array to 6 transmit x 8 receive for each processor-less MMIC (monolithic microwave integrated circuit) radar head in the new architecture. This is done by applying AI software to dynamically adapt the radar waveforms generated with existing MMIC devices and using AI “sparsification” to create virtual antennas. The number of MMICs is drastically reduced while achieving an extremely high 0.5 degrees of joint azimuth and elevation angular resolution. Additionally, Ambarella’s centralized architecture consumes significantly less power, at the maximum duty cycle, and reduces the bandwidth for data transport by six times, while eliminating the need for pre-filtered, edge processing and its resulting loss in sensor information.
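For a sense of scale, the standard MIMO relationship gives the number of virtual channels as the product of transmit and receive elements. The sketch below applies that textbook rule to the 6 x 8 figure; it assumes the figure counts physical transmit and receive elements per radar head, and it says nothing about how the Oculii software synthesizes its additional virtual antennas, which the article does not detail.

```python
def virtual_channels(num_tx: int, num_rx: int) -> int:
    """Virtual channels of a MIMO array: one per transmit/receive pair."""
    if num_tx <= 0 or num_rx <= 0:
        raise ValueError("antenna counts must be positive")
    return num_tx * num_rx


# 6 transmit x 8 receive, as stated for each radar head in the article.
per_head = virtual_channels(6, 8)
print(per_head)  # 48
```

Forty-eight channels per head is far below the hundreds or thousands of antennas Hong attributes to conventional imaging radars, which is where the bandwidth saving comes from.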

The software-defined centralized architecture also enables dynamic allocation of the CV3’s processing resources based on real-time conditions, both between sensor types and among sensors of the same type. For example, in extremely rainy conditions that diminish long-range camera data, the CV3 can shift some of its resources to improve radar inputs. Likewise, if it is raining while driving on a highway, the CV3 can focus on data coming from front-facing radar sensors to further extend the vehicle’s detection range while providing faster reaction times.

This can’t be done with an edge-based architecture, where the radar data are being processed at each module, and where processing capacity is specified for worst-case scenarios and often goes underutilized. These two approaches to radar processing are summarized in the table.

CV3 marks the debut of Ambarella’s next-generation CVflow architecture, with a neural vector processor and a general vector processor, both designed by Ambarella to include radar-specific signal processing enhancements. They work in tandem to run the Oculii advanced radar perception software with far higher performance, including speeds up to 100 times faster than traditional edge radar processors can achieve.

The new centralized architecture has easier OTA (over-the-air) software updates for continuous improvement and future-proofing. Instead of each edge radar module’s processor having to be updated individually after determining the processor and OS being used in each, a single OTA update can be pushed to the CV3 SoC and aggregated across all system radar heads.

Because the radar heads need no processor of their own, costs fall for both the upfront bill of materials and repairs after a crash (most radars are located behind the vehicle’s bumper). In addition, many of the edge-processor radar modules already deployed never receive software updates because of the software complexity.

The benefits of data centralization

Oculii’s perception technology enables extremely high resolution with fewer antennas “in a way that’s very elegant and becomes supercharged with Ambarella’s architecture,” said Hong of the concept, which leverages sparsity. “We use several orders of magnitude fewer antennas, and this allows us to have much less data to transport. So rather than terabytes, we’re in the gigabits worth of data per second.”

However, with so many fewer antennas, the array is not completely filled. The technology compensates with an intelligent, adaptive waveform that learns from the environment and sends different information at different times, while compute calculates all of the missing information.

“This happens in a way where, the more compute that we have, and the faster we can do these calculations, the more sparsity we can enable,” explained Hong. “This is really a virtuous cycle. As the computing infrastructure becomes more powerful, the radar head actually can become cheaper, smaller, lighter, and lower power.”

Ambarella execs believe that moving all of the radar data into a central processor with vision gives its solution a real competitive advantage.

“You have camera companies and…radar companies, but no real company that does both radar and camera processing at a high level for these AV systems, and Ambarella, we believe, is really the first,” said Hong. “By combining radar and camera data on the same processing fabric, we’re able to do very low-level sensor fusion, which for the first time opens up what you can do with the AI models.”

It makes them more efficient and more powerful.

“Because the two modalities are connected to the same fabric, you also can shift around where you’re allocating the resources depending on what’s happening…,” added Hong. “That’s really unique and not doable today.”

For instance, on the highway at high speeds, the radar needs to detect far in front, but in a parking lot, it needs to see 360 degrees around.

“Having a centralized radar architecture allows you to shift that processing around in a way where it’s still cost-effective because you’re not prefixing for the worst-case scenario that you need to handle,” said Hong. “And it allows you to dynamically allocate all this processing to where it needs to be.”
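The idea of shifting a shared compute budget between sensors can be sketched as a simple proportional split. Everything here is hypothetical: the sensor names, the weights, and the `allocate_compute` function are illustrations of the concept and do not describe how the CV3 actually schedules its resources.

```python
def allocate_compute(total_budget: float, weights: dict[str, float]) -> dict[str, float]:
    """Split a shared compute budget in proportion to per-sensor weights."""
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("at least one weight must be positive")
    return {name: total_budget * w / total_weight for name, w in weights.items()}


# Hypothetical weights: favor the front radar on the highway,
# and the surround (corner) radars in a parking lot.
HIGHWAY = {"front_radar": 6.0, "corner_radars": 2.0}
PARKING = {"front_radar": 2.0, "corner_radars": 6.0}

highway_split = allocate_compute(100.0, HIGHWAY)  # budget in percent
parking_split = allocate_compute(100.0, PARKING)

print(highway_split)  # {'front_radar': 75.0, 'corner_radars': 25.0}
print(parking_split)  # {'front_radar': 25.0, 'corner_radars': 75.0}
```

The same total budget serves both scenarios, whereas an edge design would need each module sized for its own worst case.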

The new architecture allows Ambarella to provide many benefits for its customers.

“On the radar side, it allows us to get several orders of magnitude performance improvement over what’s traditionally been available,” said Hong. “That means longer range, higher resolution, much more density, higher sensitivity, and multiple radars processed at the same time. And the beautiful part is we do all of this while making the radar sensor itself cheaper, smaller, lower power, and thinner.”

Its radar module is the size of a postcard, thin and lightweight, and consumes less than 5 W of power. Even so, the sensor achieves very high angular resolution of less than half a degree in both elevation and azimuth. It also has extremely high density, with tens of thousands of points per frame at 20-30 frames per second, or close to half a million points per second.
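The point-rate figure follows from multiplying points per frame by frame rate. As a quick check, the sketch below assumes 20,000 points per frame, an illustrative value chosen to stand in for "tens of thousands"; the article does not give an exact number.

```python
def points_per_second(points_per_frame: int, frames_per_second: int) -> int:
    """Point-cloud throughput: points in one frame times frames per second."""
    return points_per_frame * frames_per_second


# Assumption: 20,000 points per frame (the article says "tens of thousands").
POINTS_PER_FRAME = 20_000

rates = {fps: points_per_second(POINTS_PER_FRAME, fps) for fps in (20, 25, 30)}
print(rates)  # {20: 400000, 25: 500000, 30: 600000}
```

At the middle of the quoted frame-rate range, that assumption lands on half a million points per second, in line with the article's figure.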

The new centralized architecture will be demonstrated at an Ambarella invitation-only event taking place during CES 2023. For sampling and evaluation information on the Oculii AI radar technology and CV3 AI domain controller SoC family, contact Ambarella.