A team of researchers at the Massachusetts Institute of Technology (MIT) has developed a self-driving system that uses machine learning to avoid computationally intensive tuning of LiDAR-generated point clouds. Their new end-to-end framework can navigate autonomously using only raw 3D point cloud data and low-resolution GPS maps.

The 3D data output by a LiDAR sensor is immense and computationally intensive to process; a sensor can produce more than 2 million points per second. For navigation, this data often has to be collapsed into a 2D representation, losing significant information in the process.
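A minimal pure-Python sketch of that collapse, assuming one common projection: a bird's-eye-view height map that keeps only the tallest return in each grid cell. Conventional pipelines vary in the projection they use, so this is an illustration rather than any specific system's method. The helper names `bev_max_height` and `raw_bytes_per_second` are hypothetical, and the data-rate arithmetic assumes three 32-bit floats (x, y, z) per point.

```python
import math
from typing import Dict, Iterable, Tuple

# A LiDAR return as (x, y, z) in metres; real sensors add intensity, ring, time.
Point = Tuple[float, float, float]


def bev_max_height(points: Iterable[Point], cell_size: float = 0.5) -> Dict[Tuple[int, int], float]:
    """Collapse a 3D point cloud into a 2D bird's-eye-view (BEV) height map.

    Every point is binned by its (x, y) position; each cell keeps only the
    highest z value, so all other points in that vertical column are lost.
    """
    grid: Dict[Tuple[int, int], float] = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        if cell not in grid or z > grid[cell]:
            grid[cell] = z
    return grid


def raw_bytes_per_second(points_per_second: int, floats_per_point: int = 3,
                         bytes_per_float: int = 4) -> int:
    """Uncompressed data rate, assuming float32 x, y, z per point."""
    return points_per_second * floats_per_point * bytes_per_float


if __name__ == "__main__":
    scan = [(1.0, 2.0, 0.1), (1.2, 2.3, 1.5), (1.1, 2.1, 0.8), (5.0, 0.0, 0.2)]
    print(bev_max_height(scan))             # {(2, 4): 1.5, (10, 0): 0.2}
    print(raw_bytes_per_second(2_000_000))  # 24000000
```

The three returns near (1.1, 2.1) fall into the same cell and only the 1.5 m return survives, which is the kind of information loss described above. At float32 precision, 2 million xyz points per second amounts to 24 MB of raw data per second before any intensity or timestamp fields are added.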

The MIT researchers designed new deep learning components that use computer hardware more efficiently, allowing the vehicle to be controlled in real time. The system can also estimate how certain it is about any given prediction, and can therefore give more or less weight to that prediction when making decisions. This approach, which the authors call Hybrid Evidential Fusion, helps the system adapt to unexpected events.
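The idea of weighting predictions by their certainty can be sketched as follows. This is not the paper's Hybrid Evidential Fusion, whose exact rule is not given here. It is a minimal stand-in that assumes each prediction comes with Normal-Inverse-Gamma parameters (gamma, nu, alpha, beta) in the style of deep evidential regression, which yields an uncertainty estimate from a single forward pass, and it combines predictions with plain inverse-variance weighting. The names `EvidentialOutput` and `fuse` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EvidentialOutput:
    """Normal-Inverse-Gamma (NIG) parameters from one forward pass,
    as in deep evidential regression. Requires nu > 0, alpha > 1, beta > 0."""
    gamma: float  # predicted control value, e.g. a steering command
    nu: float
    alpha: float
    beta: float

    def epistemic_variance(self) -> float:
        # Var[mu] = beta / (nu * (alpha - 1)): large when evidence is scarce.
        if self.nu <= 0 or self.alpha <= 1 or self.beta <= 0:
            raise ValueError("need nu > 0, alpha > 1, beta > 0")
        return self.beta / (self.nu * (self.alpha - 1.0))


def fuse(predictions: List[EvidentialOutput]) -> float:
    """Inverse-variance weighted average of the predicted control values.

    Confident predictions (low variance) dominate; uncertain ones are
    down-weighted rather than discarded.
    """
    weights = [1.0 / p.epistemic_variance() for p in predictions]
    return sum(w * p.gamma for w, p in zip(weights, predictions)) / sum(weights)


if __name__ == "__main__":
    confident = EvidentialOutput(gamma=0.10, nu=10.0, alpha=2.0, beta=1.0)  # variance 0.1
    unsure = EvidentialOutput(gamma=0.50, nu=1.0, alpha=2.0, beta=1.0)      # variance 1.0
    print(fuse([confident, unsure]))  # about 0.136, pulled toward the confident 0.10
```

Inverse-variance weighting is chosen here only because it is the simplest rule that gives more weight to more certain predictions; a real system may fuse predictions quite differently.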

The team will next address more complex events, such as adverse weather conditions and dynamic interaction with other vehicles. Their paper, “Efficient and Robust LiDAR-Based End-to-End Navigation,” was presented in May at the International Conference on Robotics and Automation (ICRA). The authors are Ph.D. students Zhijian Liu, Alexander Amini and Sibo Zhu, and professors Sertac Karaman, Song Han and Daniela Rus.

From the paper’s abstract:

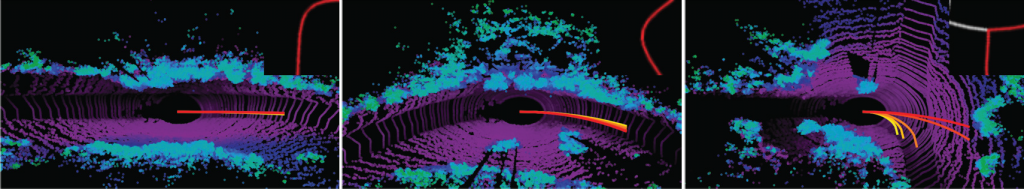

“Deep learning has been used to demonstrate end-to-end neural network learning for autonomous vehicle control from raw sensory input. While LiDAR sensors provide reliably accurate information, existing end-to-end driving solutions are mainly based on cameras since processing 3D data requires a large memory footprint and computation cost. On the other hand, increasing the robustness of these systems is also critical; however, even estimating the model’s uncertainty is very challenging due to the cost of sampling-based methods. In this paper, we present an efficient and robust LiDAR-based end-to-end navigation framework. We first introduce Fast-LiDARNet that is based on sparse convolution kernel optimization and hardware-aware model design. We then propose Hybrid Evidential Fusion that directly estimates the uncertainty of the prediction from only a single forward pass and then fuses the control predictions intelligently. We evaluate our system on a full-scale vehicle and demonstrate lane-stable as well as navigation capabilities. In the presence of out-of-distribution events (e.g., sensor failures), our system significantly improves robustness and reduces the number of takeovers in the real world.”

Image of augmented data samples, above, excerpted from the paper.
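The abstract attributes Fast-LiDARNet's efficiency partly to sparse convolution. The pure-Python sketch below illustrates only the underlying idea, assuming a single-channel, submanifold-style variant (a common form of sparse convolution, not necessarily the one the authors use): a hypothetical `voxelize` helper stores only occupied voxels in a hash map, and a hypothetical `sparse_conv3` evaluates a 3x3x3 kernel only at those voxels. The paper's kernel optimizations and hardware-aware design are not reproduced.

```python
import math
from collections import defaultdict
from itertools import product
from typing import Dict, Iterable, Tuple

Voxel = Tuple[int, int, int]


def voxelize(points: Iterable[Tuple[float, float, float]], voxel_size: float) -> Dict[Voxel, float]:
    """Store only occupied voxels; the single feature is the point count."""
    counts: Dict[Voxel, float] = defaultdict(float)
    for x, y, z in points:
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        counts[key] += 1.0
    return dict(counts)


def sparse_conv3(features: Dict[Voxel, float], kernel: Dict[Voxel, float]) -> Dict[Voxel, float]:
    """Submanifold-style 3x3x3 convolution (single channel).

    Outputs are computed only at voxels that are already occupied, and only
    occupied neighbours contribute, so empty space costs nothing.
    """
    out: Dict[Voxel, float] = {}
    for i, j, k in features:
        acc = 0.0
        for offset in product((-1, 0, 1), repeat=3):
            neighbour = (i + offset[0], j + offset[1], k + offset[2])
            if neighbour in features:
                acc += kernel[offset] * features[neighbour]
        out[(i, j, k)] = acc
    return out


if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.1), (0.6, 0.1, 0.1), (3.0, 3.0, 3.0)]
    voxels = voxelize(cloud, voxel_size=0.5)  # 3 occupied voxels
    ones = {offset: 1.0 for offset in product((-1, 0, 1), repeat=3)}
    print(sparse_conv3(voxels, ones))  # {(0, 0, 0): 3.0, (1, 0, 0): 3.0, (6, 6, 6): 1.0}
```

In this example only three voxels are ever stored or visited, whereas a dense grid spanning voxel indices 0 to 6 on each axis would hold 7 × 7 × 7 = 343 cells. Real LiDAR scans are similarly dominated by empty space, which is why sparse formulations pay off.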