Roboticists now have a new tool that allows them to train their robots via realistic simulations, where they can interact with environments that go beyond what’s possible in the real world.

That tool is the Isaac simulation engine from NVIDIA, or Isaac Sim, with Omniverse used as the underlying foundation for the simulators, according to a blog post written by Gerard Andrews, a senior product marketing manager for NVIDIA.

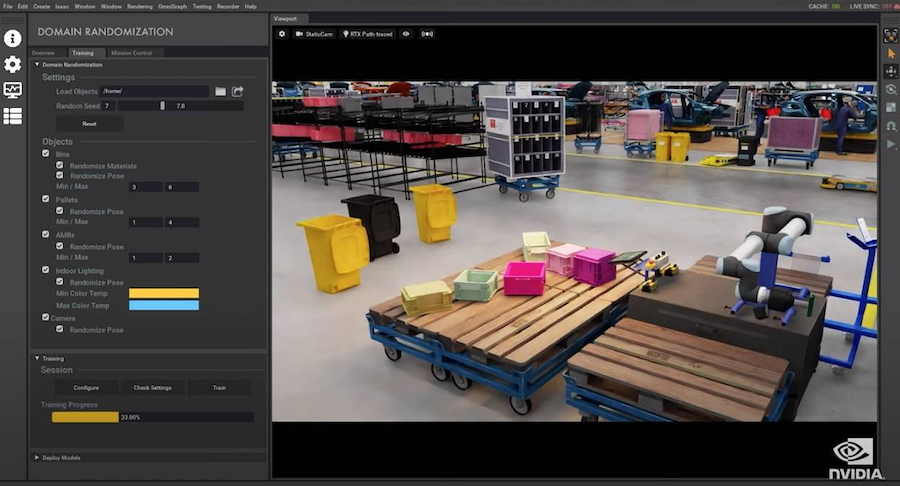

Now available in open beta, the engine is designed to create enhanced photorealistic environments while also streamlining synthetic data generation and domain randomization to build ground-truth datasets. These datasets can then be used to train robots in a variety of applications, including logistics, warehouses and factories of the future.

Isaac Sim also adds improved multi-camera support and sensor capabilities, and a PTC OnShape CAD importer to make it easier to bring in 3D assets. These features expand the breadth of robots and environments that can be modeled and deployed. They cover every stage of the workflow, from designing and developing the physical robot, to training it, to deploying it in a digital twin, where it’s simulated and tested in an accurate and photorealistic virtual environment.

To deliver realistic robotics simulations, Isaac Sim leverages Omniverse’s GPU-enabled physics simulation with PhysX 5, photorealism with real-time ray and path tracing, and Material Definition Language (MDL) support for physically based rendering. It addresses common use cases including manipulation, autonomous navigation and synthetic data generation for training. The modular design allows users to customize and extend the toolset for many applications and environments.

Omniverse Nucleus and Omniverse Connectors enable collaborative building, sharing and importing of environments and robot models in Universal Scene Description (USD). The robot’s brain can easily be connected to a virtual world through the Isaac SDK and ROS/ROS2 interface, fully featured Python scripting, and plugins for importing robot and environment models.

It also features Synthetic Data Generation, a tool used to train robot perception models. Isaac Sim has built-in support for a variety of sensor types for these models including RGB, depth, bounding boxes and segmentation.

“In the open beta, we have the ability to output synthetic data in the KITTI format,” according to the blog post. “This data can then be used directly with the NVIDIA Transfer Learning Toolkit to enhance model performance with use case-specific data.”
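
For readers unfamiliar with the KITTI format, each object in an image is described by one line of 15 space-separated fields: class name, truncation, occlusion, observation angle, the 2D bounding box (left, top, right, bottom), 3D dimensions, 3D location and rotation. The sketch below shows roughly what writing such a line could look like in plain Python. It is not Isaac Sim's exporter; `Box2D` and `to_kitti_line` are hypothetical names, and zero-filling the 3D fields is an assumption for a 2D-only detection workflow.

```python
from dataclasses import dataclass


@dataclass
class Box2D:
    """Hypothetical 2D detection: a class label plus pixel coordinates."""
    label: str
    left: float
    top: float
    right: float
    bottom: float


def to_kitti_line(box: Box2D) -> str:
    """Format one 2D box as a 15-field KITTI label line.

    The 3D-related fields are zero-filled placeholders (an assumption
    for 2D-only use), not values produced by any simulator.
    """
    fields = [
        box.label,                # object class
        "0.00",                   # truncated
        "0",                      # occluded
        "0.00",                   # alpha (observation angle)
        f"{box.left:.2f}",        # bbox left
        f"{box.top:.2f}",         # bbox top
        f"{box.right:.2f}",       # bbox right
        f"{box.bottom:.2f}",      # bbox bottom
        "0.00", "0.00", "0.00",   # 3D dimensions: height, width, length
        "0.00", "0.00", "0.00",   # 3D location: x, y, z
        "0.00",                   # rotation_y
    ]
    return " ".join(fields)


if __name__ == "__main__":
    print(to_kitti_line(Box2D("pallet", 10, 20, 110.5, 220)))
```

One label file per image, with one such line per object, is the usual layout for KITTI-style 2D detection datasets.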

Domain Randomization is another key feature. It varies the parameters that define a simulated scene, such as lighting, color and texture. One of the main objectives is to enhance machine learning (ML) model training by exposing the neural network to a wide variety of domain parameters in simulation. This teaches the model what to ignore, which helps it generalize well when it encounters real-world scenarios.

Isaac Sim supports the randomization of many different attributes that help define a given scene. With these capabilities, ML engineers can ensure the synthetic dataset contains sufficient diversity for robust model performance.

“In Isaac Sim open beta, we have enhanced the domain randomization capabilities by allowing the user to define a region for randomization,” according to the post. “Developers can now draw a box around the region in the scene that is to be randomized and the rest of the scene will remain static.”
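
The idea of region-limited randomization can be illustrated with a small, simulator-independent sketch. The code below does not use the Isaac Sim API; `SceneObject`, `Region` and `randomize_in_region` are hypothetical names, and color is the only attribute varied. It simply assumes that objects inside an axis-aligned box get a new random color while everything outside stays untouched.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SceneObject:
    """Hypothetical scene object with a position and an RGB color."""
    name: str
    position: Vec3
    color: Vec3 = (0.5, 0.5, 0.5)


@dataclass
class Region:
    """Axis-aligned box defined by its minimum and maximum corners."""
    lo: Vec3
    hi: Vec3

    def contains(self, point: Vec3) -> bool:
        # A point is inside when every coordinate lies within the bounds.
        return all(l <= c <= h for l, c, h in zip(self.lo, point, self.hi))


def randomize_in_region(
    objects: List[SceneObject], region: Region, rng: random.Random
) -> List[str]:
    """Assign a random color to objects inside the region.

    Objects outside the region are left as they are. Returns the names
    of the objects that were changed.
    """
    changed = []
    for obj in objects:
        if region.contains(obj.position):
            obj.color = (rng.random(), rng.random(), rng.random())
            changed.append(obj.name)
    return changed


if __name__ == "__main__":
    scene = [
        SceneObject("box", (0.0, 0.0, 0.0)),
        SceneObject("shelf", (5.0, 5.0, 0.0)),
    ]
    region = Region(lo=(-1.0, -1.0, -1.0), hi=(1.0, 1.0, 1.0))
    print(randomize_in_region(scene, region, random.Random(0)))
```

Calling the function once per generated frame, with a seeded `random.Random`, would give a reproducible sequence of variations confined to the chosen region.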

Thousands of developers have worked with Isaac Sim through the company’s early access program.