At the AutoSens event this week in Brussels, Algolux Inc. announced an extension of its Eos perception software portfolio to deliver both industry-leading dense depth estimation and robust perception to further increase the safety of passenger and transport vehicles in a broad range of lighting and weather conditions.

Algolux was founded in 2014 by CEO Allan Benchetrit and CTO Felix Heide as a computer vision company addressing the critical issue of safety for advanced driver assistance systems and autonomous vehicles. Headquartered in Montreal, with offices in Palo Alto and Munich, the company was built on groundbreaking research at the intersection of deep learning, computer vision, and computational imaging.

The artificial intelligence startup’s new depth-perception software is said to address the cost and performance limitations of today’s ADAS (advanced driver assistance system) and AV (autonomous vehicle) platforms by applying the same deep-learning approach used in Algolux’s other perception software.

It was previewed for Inside Autonomous Vehicles by Dave Tokic, VP of Marketing & Strategic Partnerships at Algolux, at last week’s ADAS & Autonomous Vehicle Technology Expo and Conference in San Jose, CA.

“What we’re doing is extending that portfolio to include very dense depth estimation,” said Tokic. This involves “being able to determine whether an object—a pedestrian or vehicle—is 50, 200, 600 m out, to basically overcome the limitations today with lidar- and radar-based systems.”

The ability to estimate the distance of an object is a fundamental capability for ADAS and AV systems. It lets the vehicle understand where things are in its surroundings so that it knows when to perform a lane change, help park the car, issue a warning to the driver, or brake automatically in an emergency, such as when there is debris on the road. This is accomplished today by various types of sensors, such as lidar, radar, and stereo or mono cameras, together with edge software that interprets the sensor information to determine the distance to important objects, features, or obstacles in front of and around the vehicle.

According to Algolux, each of the current approaches has limitations that hamper safe operation in all conditions. The company says that lidar has a limited effective range of up to 200 m (656 ft) because point density decreases the farther away an object is, which results in poor object detection at distance. It also says lidar has low robustness in harsh weather such as fog or rain because of laser backscatter, and that it is costly, currently hundreds to thousands of dollars per sensor. Radar has good range and robustness, but its poor resolution limits its ability to detect and classify objects. Stereo camera approaches can do a good job of object detection but are hard to keep calibrated and have low robustness. In addition, mono (single-camera) solutions have many issues that result in poor depth estimation.
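The point-density argument can be illustrated with simple geometry. The sketch below is not from Algolux; it assumes an idealized scanner with a fixed horizontal angular resolution, and the 0.2 degree resolution and 0.5 m target width are hypothetical values chosen only for illustration. It estimates how many beams land across a target as range grows.

```python
import math


def beam_spacing_m(range_m: float, angular_res_deg: float) -> float:
    """Lateral gap between adjacent beams at a given range (small-angle approximation)."""
    return range_m * math.radians(angular_res_deg)


def returns_across_target(target_width_m: float, range_m: float, angular_res_deg: float) -> float:
    """Approximate number of beams that fall across a target of the given width."""
    return target_width_m / beam_spacing_m(range_m, angular_res_deg)


if __name__ == "__main__":
    ANGULAR_RES_DEG = 0.2   # assumed value, not taken from any specific sensor
    TARGET_WIDTH_M = 0.5    # assumed width of a pedestrian-sized target
    for range_m in (50.0, 100.0, 200.0):
        n = returns_across_target(TARGET_WIDTH_M, range_m, ANGULAR_RES_DEG)
        print(f"{range_m:5.0f} m: about {n:.2f} beams across the target")
```

Under these assumptions the count falls in inverse proportion to range, and at 200 m fewer than one beam on average crosses the target in a single scan line.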

Algolux says it has proven its Eos depth-perception performance in OEM and Tier 1 engagements involving both trucking and automotive applications in North America and Europe. One of those customers is Mercedes-Benz.

“Mercedes-Benz has a track record as an industry leader delivering ADAS and autonomous driving systems, so we intimately understand the need to further improve the perception capabilities of these systems to enable safer operation of vehicles in all operating conditions,” said Werner Ritter, Manager, Vision Enhancement Technology Environment Perception, Mercedes-Benz AG. “Algolux has leveraged the novel AI technology in their camera-based Eos Robust Depth Perception Software to deliver dense depth and detection that provide next-generation range and robustness while addressing limitations of current lidar- and radar-based approaches for ADAS and autonomous driving.”

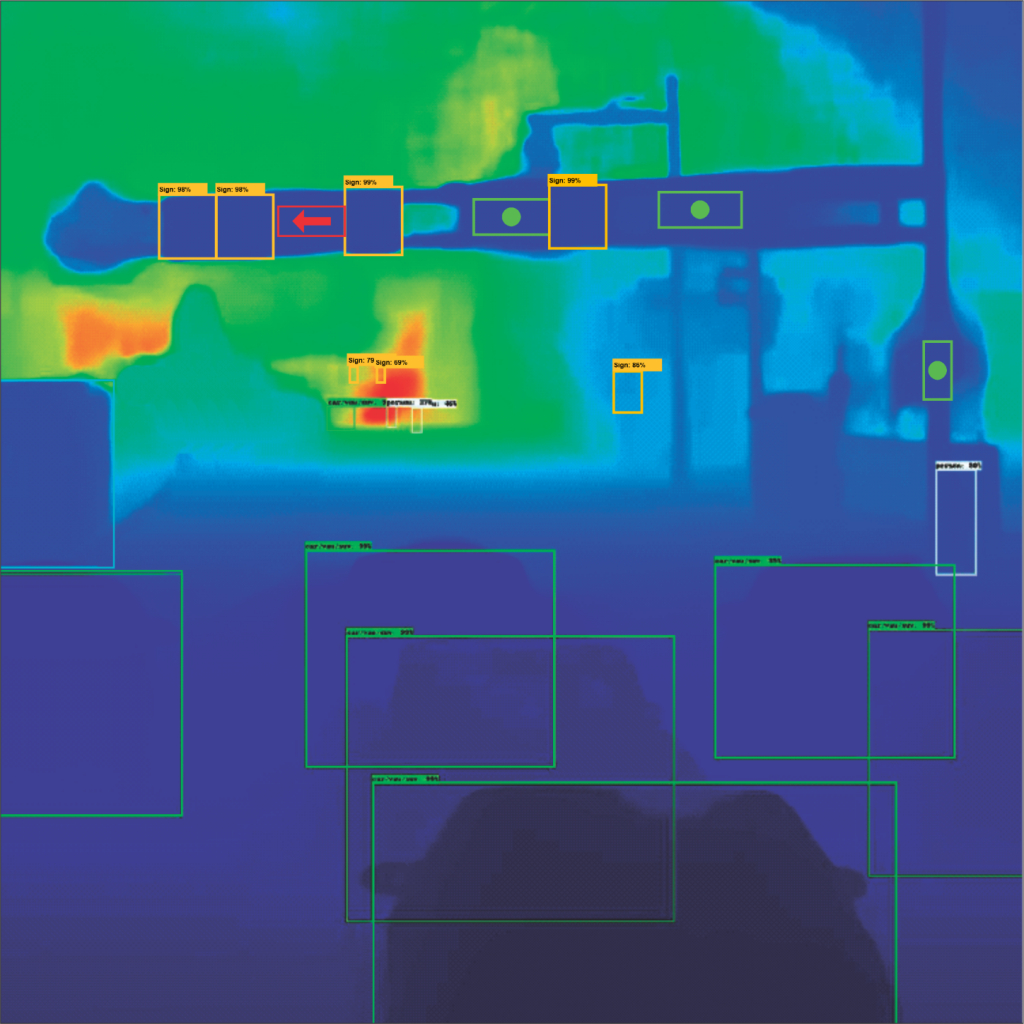

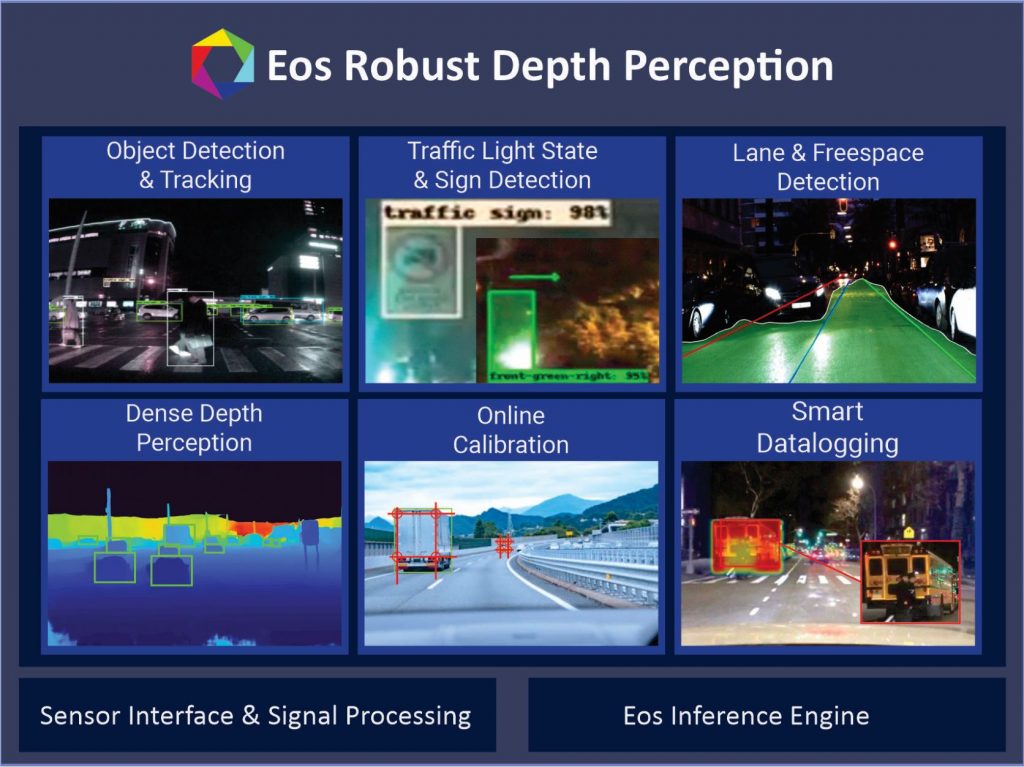

Eos depth-perception software is designed to address these limitations by providing dense depth together with accurate perception. According to the company, it can determine distance and elevation at ranges of up to 1 km (0.62 mi) and identify pedestrians, bicyclists, and even lost cargo or other hazardous road debris. Its modular capabilities are designed to provide rich 3D scene reconstruction as a highly capable and cost-effective alternative to lidar, radar, and stereo camera approaches.

The new software accomplishes this with a multi-camera approach supporting a wide baseline between the cameras—even beyond 2 m (6.6 ft), which is especially useful for long-range applications such as trucking. It supports up to 8-MP automotive camera sensors and any field of view for forward, rear, and surround configurations. Real-time adaptive calibration addresses vibration and movement between the cameras or misalignments while driving, historically a key challenge for wide-baseline configurations. It comes with an efficient embedded implementation of Algolux’s end-to-end deep-learning architecture for both depth estimation and perception.
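Why a wide baseline matters at long range can be seen from textbook stereo geometry. The sketch below is not Algolux's method, which the company describes as an end-to-end deep-learning architecture. It assumes an ideal rectified pinhole stereo pair, where depth is Z = f * B / d and a small disparity error dd produces a depth error of roughly Z^2 / (f * B) * dd. The focal length in pixels and the quarter-pixel disparity error are hypothetical values.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from disparity for an ideal rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


def depth_error_m(focal_px: float, baseline_m: float, depth_m: float,
                  disparity_error_px: float = 0.25) -> float:
    """First-order depth uncertainty: dZ is roughly Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    FOCAL_PX = 4000.0  # assumed focal length in pixels, for illustration only
    for baseline_m in (0.3, 2.0):
        for depth_m in (50.0, 200.0, 600.0):
            err = depth_error_m(FOCAL_PX, baseline_m, depth_m)
            print(f"baseline {baseline_m:.1f} m, depth {depth_m:5.0f} m: error about {err:.2f} m")
```

With these assumed numbers, the estimated error at 200 m drops from about 8.3 m with a 0.3 m baseline to 1.25 m with a 2 m baseline. The same formula shows why calibration matters more as the baseline widens: any unmodeled shift between the cameras acts as a disparity error, and the resulting depth error grows with the square of the distance.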