New research might one day prevent the AI systems in autonomous vehicles from getting confused by imperfect data from their sensors.

In a perfect world, where the cameras and other sensors on an autonomous vehicle (AV) were completely trustworthy, all its AI system would have to do when it detected an obstacle would be to perform the proper action, such as steering to one side, to avoid a collision.

But what if a glitch in the cameras shifted an image by a few pixels? If the AV blindly trusted such problematic data, or “adversarial inputs,” it might take unnecessary and potentially dangerous actions.

“You often think of an adversary being someone who’s hacking your computer, but it could also just be that your sensors are not great, or your measurements aren’t perfect, which is often the case,” study lead author Michael Everett at MIT said in a statement.

Previous research explored a number of solutions for adversarial inputs. For example, scientists often train AIs to identify objects such as vehicles by showing them hundreds of properly labeled images of such items. To check how well an AI trained with this supervised form of learning copes with sensor problems, researchers may also test it with many slightly altered versions of these images. If the AI still recognizes them correctly, it will likely prove robust against adversarial inputs.

However, analyzing every possible image alteration is computationally expensive, which makes this approach difficult to apply in time-sensitive tasks such as collision avoidance.

Instead, scientists at MIT, funded in part by Ford, pursued reinforcement learning, another form of machine learning that does not require associating labeled inputs with output activity. Rather, it aims to reinforce certain actions in response to certain inputs, based on a resulting reward. This strategy is often used to train computers to play and win games such as chess.

Previous work mostly used reinforcement learning in situations where the AI regarded the input data as true. The MIT researchers say they are the first to bring “certifiable robustness” to uncertain inputs in reinforcement learning.

Their new system, known as Certified Adversarial Robustness for deep Reinforcement Learning (CARRL), takes an input, such as an image with a single dot, and estimates what effects an adversarial influence might have, such as a region around the dot where it might actually be instead. The AI then seeks the action that would yield the best worst-case reward given every possible position of the dot within this region.
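The idea of picking the action with the best worst-case reward can be illustrated with a minimal Python sketch. This is not the researchers' implementation: the function names, the toy value function, and the numbers are all hypothetical, and a simple scan over sample positions stands in here for whatever analysis a real system would use to bound the worst case.

```python
from typing import Callable, Sequence


def robust_action(
    observed: float,
    epsilon: float,
    actions: Sequence[str],
    value: Callable[[float, str], float],
    samples: int = 21,
) -> str:
    """Return the action whose worst-case value over the uncertainty
    region [observed - epsilon, observed + epsilon] is highest.

    Assumption: the region is approximated by evenly spaced sample
    points, which is an illustration rather than a guarantee.
    """
    if epsilon == 0 or samples < 2:
        candidates = [observed]
    else:
        step = 2 * epsilon / (samples - 1)
        candidates = [observed - epsilon + i * step for i in range(samples)]

    def worst_case(action: str) -> float:
        # Lowest value this action could receive anywhere in the region.
        return min(value(position, action) for position in candidates)

    return max(actions, key=worst_case)


def toy_value(obstacle_offset: float, action: str) -> float:
    """Hypothetical value function for a car approaching an obstacle.

    obstacle_offset is the obstacle's sideways distance from the lane
    center; an offset of 1.0 or less means it is in the car's path.
    """
    if action == "swerve":
        return 5.0  # safe but slower, wherever the obstacle is
    # Going straight is best if the lane is clear, disastrous otherwise.
    return 10.0 if abs(obstacle_offset) > 1.0 else -100.0


ACTIONS = ["straight", "swerve"]

# Trusting the sensor exactly: the obstacle appears to be out of the lane.
trusting = robust_action(1.5, 0.0, ACTIONS, toy_value)

# Allowing for an error of up to 1.0: the obstacle might be in the lane.
cautious = robust_action(1.5, 1.0, ACTIONS, toy_value)

print(trusting, cautious)
```

In this toy setup, the same reading leads to different decisions depending on how much the sensor is trusted: with no allowance for error the car goes straight, while with a wide enough uncertainty region it swerves.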

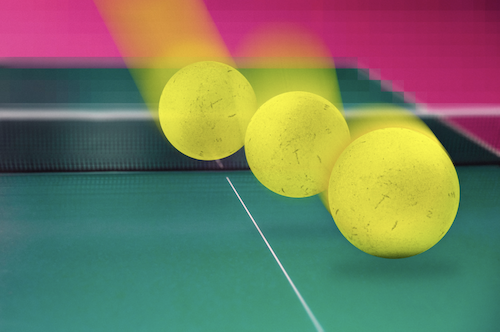

In tests with the video game Pong, in which two players operate paddles on either side of a screen to pass a ball back and forth, the scientists introduced an “adversary” that pulled the ball slightly further down than it actually was. They discovered CARRL won more games than standard techniques as the adversary’s influence grew.

“If we know that a measurement shouldn’t be trusted exactly, and the ball could be anywhere within a certain region, then our approach tells the computer that it should put the paddle in the middle of that region, to make sure we hit the ball even in the worst-case deviation,” Everett said.
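Everett's point about the middle of the region can be checked with a few lines of arithmetic. The Python sketch below is only an illustration under stated assumptions: the function names, the paddle size, and the one-sided uncertainty region (the ball is somewhere between the measured height and 12 units above it) are all made up for the example.

```python
def paddle_target(lowest: float, highest: float) -> float:
    """Midpoint of the region in which the ball might be."""
    return (lowest + highest) / 2


def worst_case_miss(paddle_y: float, lowest: float, highest: float) -> float:
    """Largest possible distance between the paddle center and the ball."""
    return max(abs(paddle_y - lowest), abs(highest - paddle_y))


# Hypothetical numbers: the sensor reports the ball at height 40, but the
# adversary may have pulled the reading down by as much as 12 units.
measured = 40.0
lowest, highest = measured, measured + 12.0
paddle_half_height = 8.0  # assumed paddle size

naive_miss = worst_case_miss(measured, lowest, highest)
robust_y = paddle_target(lowest, highest)
robust_miss = worst_case_miss(robust_y, lowest, highest)

print(f"trusting the sensor: worst miss {naive_miss}")
print(f"aiming at the middle ({robust_y}): worst miss {robust_miss}")
print("hit guaranteed:", robust_miss <= paddle_half_height)
```

With these assumed numbers, a paddle centered on the raw measurement could miss by up to 12 units, more than its half-height of 8, while a paddle centered on the middle of the region is never more than 6 units from the ball.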

The method proved similarly robust in experiments testing collision avoidance, where the researchers simulated a blue and an orange vehicle attempting to switch positions without colliding. As the scientists tampered with the orange vehicle’s data regarding the blue vehicle’s position, CARRL steered the orange vehicle around the other vehicle, taking a wider berth as the adversarial influence grew stronger and the blue vehicle’s position became more uncertain.

These findings may not apply just to imperfect sensors, but also to unpredictable interactions in the real world.

“People can be adversarial, like getting in front of a robot to block its sensors, or interacting with them, not necessarily with the best intentions,” Everett said in a statement. “How can a robot think of all the things people might try to do, and try to avoid them? What sort of adversarial models do we want to defend against? That’s something we’re thinking about how to do.”

The scientists detailed their findings online February 15 in the journal IEEE Transactions on Neural Networks and Learning Systems.