The DARPA Robotics Challenge has passed and the winning teams from South Korea and the U.S. have long since returned home, having split some $3.5 million in prizes for their disaster-response robots.

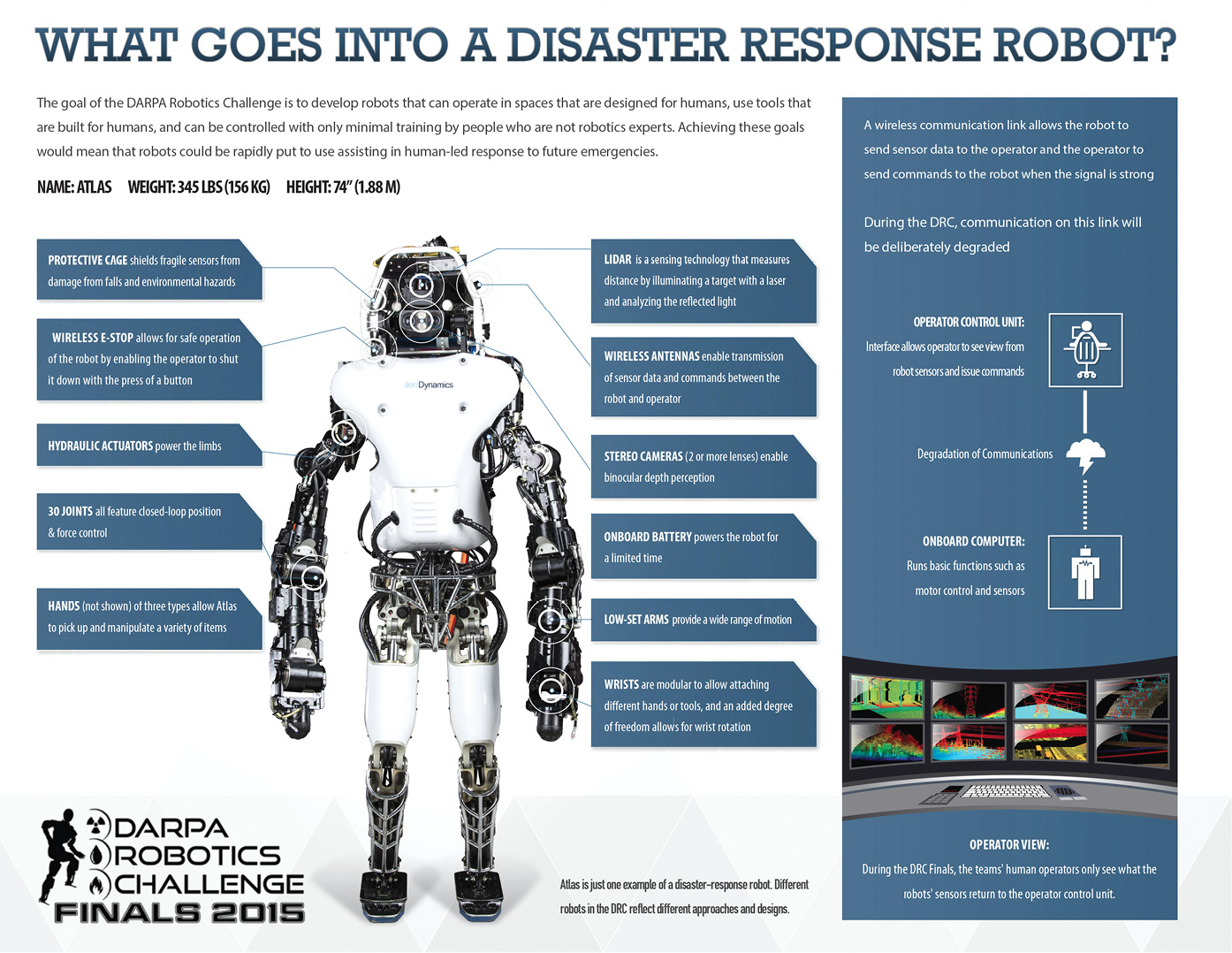

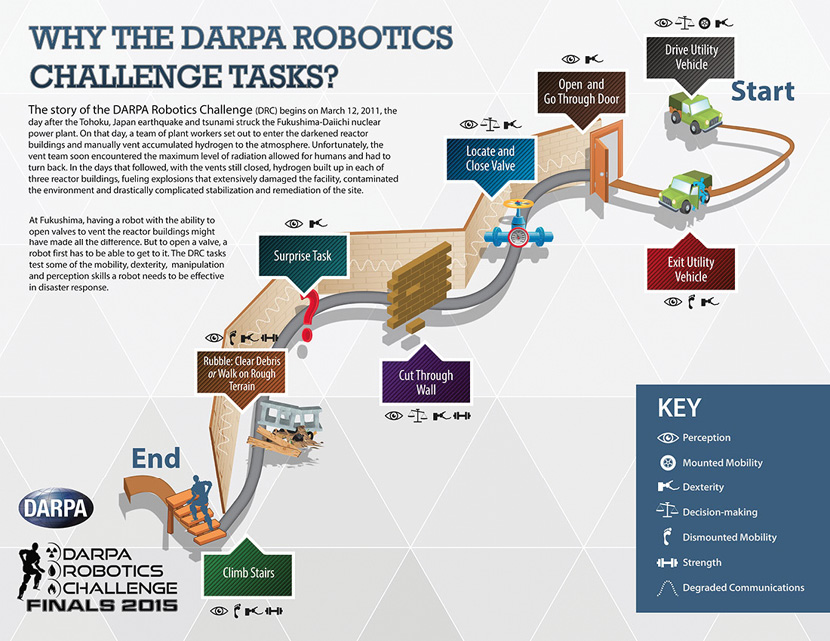

Twenty-three teams sent robots through an obstacle course that required the machines to drive, successfully park and exit a car, open a door, turn a valve, cut a hole in a wall, plug a cord into an outlet, and traverse rubble and stairs—no small tasks even for advanced robots in 2015. Only three teams’ robots completed all eight tasks in the one-hour window and more than a handful failed to complete any of the tasks. Videos of some less-than-agile Challenge robots falling or walking into doorframes went viral around the world.

But DARPA’s goal of “accelerat[ing] progress in robotics and hasten[ing] the day when robots have sufficient dexterity and robustness to enter areas too dangerous for humans” was roundly achieved, even if the machines are—for now—a little more Charlie Chaplin than Terminator.

Here are some lessons learned during the Challenge that should help propel robotics toward its already promising future.

Think Like a Human

The DRC-HUBO robot fielded by a University of Nevada, Las Vegas (UNLV) team took eighth place, but team lead Paul Oh is still pretty jazzed.

“We were the fastest team driving from start to end,” he said. “I wanted to show that we could be either close to human or better than human in driving.” In fact, UNLV’s DRC-HUBO managed the 450-foot obstacle course in less than a minute, about as fast as a human student could with practice.

How did UNLV get the robot to drive so well? They talked to a driving teacher.

“It so happens that one of our teammates was a driving instructor,” Oh said. “He said…a common mistake that first-time drivers do [is] they’re very choppy in their motions.” Oh compared a novice human driver to the way a typical robot drives: by constantly checking and rechecking its position relative to obstacles. On the other hand, “experienced drivers know we go with the flow of traffic. We don’t calculate our position, we regulate our velocity.”

Humans figure this out through practice. Putting the same principle to work on UNLV’s robot meant building a system similar to the back-up cameras available on many modern cars, only pointing forward. “So the user is always knowing the vector at which they’re going to be turning to.”
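For readers curious how a forward-pointing guide overlay of this kind might be computed, here is a minimal sketch. It is not UNLV's implementation: it assumes a simple kinematic bicycle model, and the function name `preview_path` and the default wheelbase, horizon, and step count are all hypothetical values chosen for illustration.

```python
import math
from typing import List, Tuple


def preview_path(
    speed: float,           # forward speed in m/s
    steering_angle: float,  # front-wheel angle in radians, positive = left
    wheelbase: float = 2.0, # assumed axle-to-axle distance in meters
    horizon: float = 2.0,   # how far ahead to predict, in seconds
    steps: int = 10,        # number of predicted points
) -> List[Tuple[float, float]]:
    """Predict where the vehicle will travel if speed and steering are held.

    Points are in the vehicle's own frame: x forward, y to the left.
    Uses a kinematic bicycle model, which is an assumption of this sketch.
    """
    x = y = heading = 0.0
    dt = horizon / steps
    points = []
    for _ in range(steps):
        # Turn rate grows with speed and steering angle.
        heading += speed / wheelbase * math.tan(steering_angle) * dt
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        points.append((x, y))
    return points


if __name__ == "__main__":
    # Gentle left turn at 3 m/s; an operator display could draw these points.
    for px, py in preview_path(speed=3.0, steering_angle=0.1):
        print(f"{px:.2f} m ahead, {py:.2f} m left")
```

Drawing the predicted points over the camera image would let an operator see the turn the vehicle is committed to, rather than repeatedly re-measuring its position against obstacles.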

Though self-driving and driverless cars are making huge strides, Oh still sees a use case for a robot that can drive a car.

“There are times when you just want a robot to take over control of a vehicle,” Oh explained. “You don’t want to have to modify the vehicle, you just want to upload into HUBO and say ‘That’s a Hummer. That’s a tractor. That’s a spaceship. That’s a boat. These are the specs, now I want you to drive.’”

Industry is taking note: A hotel chain in Las Vegas is in preliminary talks with Oh to see if HUBOs can assist them with either guest-facing tasks, like room service, or material handling tasks.

“If you can drive an ATV, you can drive a forklift,” Oh said. “They handle 40 million visitors a year that need to be fed, moving pallets of vegetables and crates of meat and what have you.”

Keep the Human Touch

True to its name, the Florida Institute for Human and Machine Cognition (IHMC), which took second place at the Challenge, kept humans in the loop.

As part of the Challenge, DARPA artificially limited communication from the human operators to the robots, so full teleoperation was nearly impossible. As a result, many teams decided to go as fully autonomous as possible, research scientist Matt Johnson said.

“You’ll notice some of the teams missed the target” in the drilling challenge, which required the robot to use a drill to cut a marked circle out of a wall, Johnson said. “We were told what drill we were using and what the target would look like, so you’d ask, how on earth would you miss? This is my guess: they had an autonomous algorithm. They had the ‘cut the wall’ button. If that doesn’t work, then what do you do? In our team, the operator isn’t in charge of just saying cut the wall, but you…can set the pattern, the size, the depth. It works more reliably when you can look at the situation and say, ‘I can tell the robot is off a little bit, let me adjust it.’”

Perhaps because of this, IHMC never missed on the drill task.

User experience for the robot operator was also a key focus. “It’s not just giving the operator an interface and pumping data [through it],” Johnson explained. “It’s about filtering that data so the human understands what’s going on. It’s not enough to see joint positioning data; I need to understand that currently I’m pushing myself over.”

Johnson added that there’s still no substitute for human judgment.

“Humans are…good at intuition, which machines have not demonstrated.”

Robots Need Proprioception Too

In humans, proprioception is the sense of where your body is, the skill that allows people to maintain their balance or pass a sobriety test like touching finger to nose.

In the Warner robot, fielded by a team of researchers from Worcester Polytechnic Institute and Carnegie Mellon University, it’s a layer of software designed to enhance robotic balance. In addition to putting extra work into the low-level controllers that planned Warner’s steps, calculated its position and balance, and more, the WPI-CMU team added an overseeing controller that always checked the robot’s stability, center of mass, and the pressure on its feet. If the system became unstable, the robot would instantly freeze.

It worked. “One of the things we’re most proud of,” said Matt DeDonato, the technical project manager for the team, “is we never fell over or restarted through the whole thing.” In fact, WPI-CMU’s team was the only one in its track (a software-focused track in which each competitor was provided a robot built by DARPA contractor Boston Dynamics as a starting point) not to fall in the finals.
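The overseeing controller described above can be illustrated with a short sketch. This is not the WPI-CMU code: it assumes a common textbook check (the ground projection of the center of mass must stay inside the foot support polygon, and the feet must be carrying load), and every name and threshold here, including `RobotState`, `is_stable`, `supervise`, the 2 cm margin, and the 20 N minimum force, is hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class RobotState:
    com_xy: Point              # center of mass projected onto the ground (m)
    support_polygon: List[Point]  # convex foot outline, counter-clockwise (m)
    foot_forces: List[float]   # vertical force measured under each foot (N)


def inside_with_margin(p: Point, polygon: List[Point], margin: float) -> bool:
    """True if p lies at least `margin` meters inside a CCW convex polygon."""
    n = len(polygon)
    for i in range(n):
        ax, ay = polygon[i]
        bx, by = polygon[(i + 1) % n]
        ex, ey = bx - ax, by - ay
        # Signed distance from the edge; positive means inside for CCW order.
        dist = (ex * (p[1] - ay) - ey * (p[0] - ax)) / math.hypot(ex, ey)
        if dist < margin:
            return False
    return True


def is_stable(state: RobotState, margin: float = 0.02,
              min_foot_force: float = 20.0) -> bool:
    """Assumed stability test: loaded feet and center of mass over them."""
    if max(state.foot_forces) < min_foot_force:
        return False  # no foot is firmly on the ground
    return inside_with_margin(state.com_xy, state.support_polygon, margin)


def supervise(state: RobotState, command: str) -> str:
    """Pass the low-level command through, or freeze if balance is at risk."""
    return command if is_stable(state) else "FREEZE"


if __name__ == "__main__":
    feet = [(0.0, 0.0), (0.3, 0.0), (0.3, 0.2), (0.0, 0.2)]
    print(supervise(RobotState((0.15, 0.10), feet, [400.0, 400.0]), "STEP"))
    print(supervise(RobotState((0.29, 0.10), feet, [400.0, 400.0]), "STEP"))
```

The point of the design is that the supervisor sits above the step planner and balance controllers and can veto any of them, so one bad low-level command freezes the robot rather than tipping it over.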

The Hubo robot built by Rainbow Co. and KAIST of South Korea won the $2 million grand prize.

Looks Matter

While not strictly related to functionality, aesthetics was a key component of the entry from NASA’s Jet Propulsion Laboratory and will be important in encouraging humans to buy into using robots.

RoboSimian, which placed fifth in the competition, is a four-limbed robot that can either wheel or scuttle along the ground. Most media reports, said Brett Kennedy, the project’s principal investigator, “call it spiderlike….Kids seem not to mind that, [but] adults do.”

In fact, RoboSimian had already gone through an extensive design process where JPL researchers studied the vehicle paint schemes, uniforms, and equipment in use by emergency response teams around the world.

“All of this was geared toward ‘How do we make a robot that looks like a piece of professional equipment?’” Kennedy said. “On the one hand, JPL didn’t want ‘Terminator.’ On the other hand, you couldn’t make it Hello Kitty because it wouldn’t look professional.”

Kennedy said because many adults still react to RoboSimian’s spiderlike features, JPL didn’t fully succeed in its product design. “As an engineer I need to solve this problem. It doesn’t have the right number of body segments, it doesn’t have the right number of limbs [to be a spider] but the human brain does its pattern recognition thing and says ‘spider.’”

The robot is of course named RoboSimian, and Kennedy said JPL actually considered covering it in fur. “We…rejected that as being counterproductive in terms of ‘not scary.’”

JPL continues to work on the problem while also determining whether RoboSimian, or a robot descended from it, can function in space. Because the hardware design is totally modular (JPL already demonstrated this by using spare RoboSimian parts to design a totally new robot called Surrogate), a spacefaring RoboSimian could sport extra limbs or different hardware, which might make the robot look even more spiderlike.

Luckily some lessons are for Earthsiders only.

“People in space care a lot less about how things look,” Kennedy said.