At Tesla Investor Day 2023 yesterday, a portion of which was live-streamed from Gigafactory Texas, investors heard about the company’s ambitious Master Plan 3 to create a sustainable energy future. They were able to see the company’s most advanced production line and hear about long-term expansion plans, the latest on energy and batteries, the upcoming generation 3 platform, and other subjects presented by many of the top leaders of the company.

While focused mostly on sustainability, the event touched on the current state of autonomy and plans for a future robotaxi fleet. CEO Elon Musk has big expectations for the value that robotaxi functionality could add to the existing and future Tesla fleet.

“This autonomy question is very big because you could potentially have…five times the utility of the asset that you currently have,” he said. “A passenger car [gets] 10 or 12 hours a week of usage plus a lot of parking expenses. An autonomous car could be 50 to 60 hours a week or something like that, and you could get rid of a lot of parking expenses. If this is true, then as autonomy is effectively turned on for [Tesla’s] fleet, it probably will be the biggest asset value increase in history overnight.”

The state of Autopilot and FSD

Ashok Elluswamy, Director of Autopilot Software at Tesla, emphasized how self-driving is a critical part of Tesla’s plan for a sustainable energy future—the theme of the investor event.

“Currently when the car is not being used, it is sitting idly in parking lots, not doing anything,” he said. “But when autonomy is truly unlocked, this car instead of being idle can go serve other customers. This, fundamentally, reduces the need to scale manufacturing to extreme levels because each car is being used way more.”

The company is relying on what it has learned from developing Autopilot, and especially FSD (Full Self-Driving), to enable its future robotaxi plans. It has finally shipped its FSD Beta software to nearly everyone who has bought it in the U.S. and Canada, according to Elluswamy.

“This amounts to roughly 400,000 customers who can turn it on anywhere and then the car would attempt to drive to the destination,” said Elluswamy. “It’s still supervised, but it can already handle turns, stop at traffic lights, yield with other objects, and generally get to the destination. We observe that people who use FSD are already five to six times safer than the U.S. national average.”

However, building a scalable, self-driving system is one of the hardest real-world AI (artificial intelligence) problems, said Elluswamy—and he should know. He’s been working on “the problem” at Tesla for nearly a decade.

He says Tesla has made significant strides in solving the problem, assembling a world-class team to execute on self-driving goals.

Company engineers have focused their efforts on three main areas to build a scalable solution: the architecture of the AI system, data, and “compute.”

“We are pushing the frontier on these three items, and as we improve the safety and the reliability and the comfort of our system, we can then unlock driverless operations, which then makes the car be used way more than what it’s used right now,” said Elluswamy.

AI architecture

Tesla is basing its AI effort on neural networks that are trained to power its vision and planning systems.

Elluswamy explained that in the early days the company used single-camera, single-frame neural networks whose outputs were stitched together in post-processing steps for the planner. But that approach “was very brittle” and not very successful.

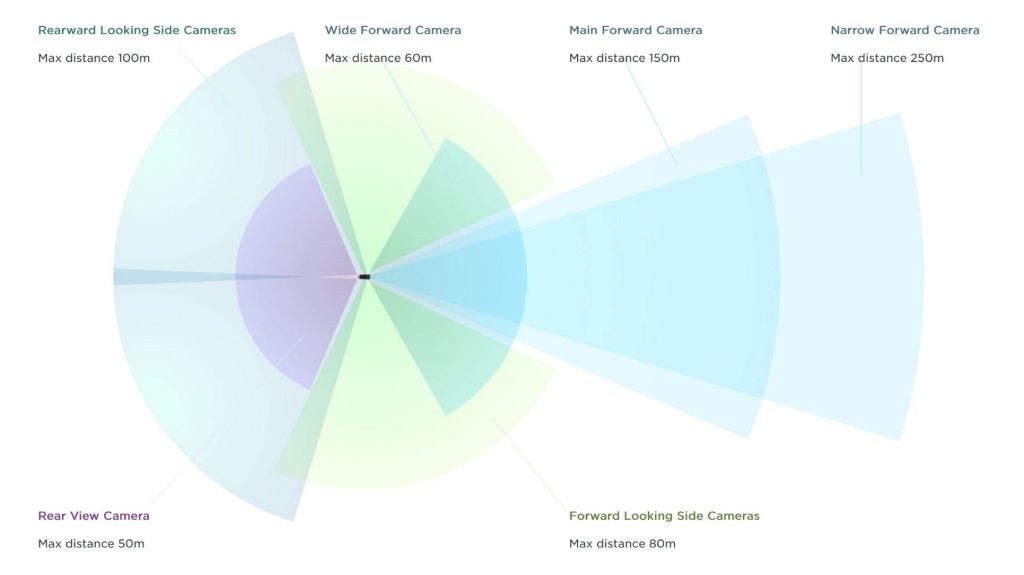

The Tesla team quickly transitioned most of its autonomy stack to a multi-camera video neural network scheme. The neural networks take in live feeds from each car’s eight cameras in real time to produce a single unified 3D output space. The system performs many tasks related to the presence and motion of obstacles, and it processes data on road features such as lanes, roads, and traffic lights.

Some tasks, such as lane connectivity, are more complicated to model using computer-vision methods alone, so the team uses other techniques such as language modeling and reinforcement learning to model them. These networks use techniques such as transformers, attention modules, and token modeling, similar to those used in other AI models such as ChatGPT.

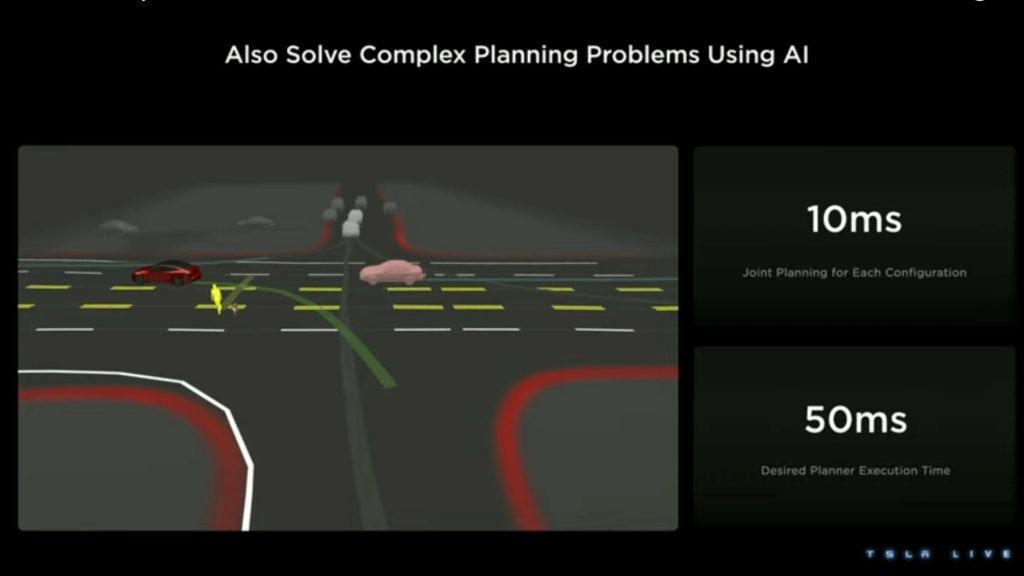

By solving the perception challenges end to end, the team has removed the brittle post-processing steps and produced higher-quality output for the planning system, which is also being updated to use more AI. Neural-network-based planners are needed especially in complicated urban driving situations with many interacting objects on the road. One example Elluswamy showed was an intersection where a Tesla turns left while yielding to cars and pedestrians crossing the road.

“We have to do this both safely and smoothly while respecting everyone’s right of way and preferences,” said Elluswamy. “If this was done [traditionally], each configuration would take 10 milliseconds of compute, and there are easily thousands of configurations. This would not be feasible using traditional compute. By using AI, we have packaged all of this into a 50-millisecond compute budget so it can run in real-time.”
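The arithmetic behind that comparison can be sketched in a few lines. The 10-millisecond and 50-millisecond figures come from the quote; the count of 1,000 configurations is an assumption (the quote says only “easily thousands”), as is the idea that a traditional planner would evaluate them one after another.

```python
# Back-of-envelope comparison using the figures quoted in the talk.
# NUM_CONFIGS is an assumed lower bound: the quote says only "easily thousands".
# Sequential evaluation is also an assumption made for illustration.

MS_PER_CONFIG = 10     # quoted cost of one configuration, traditional approach
NUM_CONFIGS = 1_000    # assumed lower bound for "thousands"
AI_BUDGET_MS = 50      # quoted compute budget for the AI-based planner


def traditional_planning_ms(num_configs: int, ms_per_config: int = MS_PER_CONFIG) -> int:
    """Total time if every configuration is evaluated one after another."""
    return num_configs * ms_per_config


def times_over_budget(num_configs: int) -> float:
    """How many times the sequential cost exceeds the 50 ms budget."""
    return traditional_planning_ms(num_configs) / AI_BUDGET_MS


if __name__ == "__main__":
    total_ms = traditional_planning_ms(NUM_CONFIGS)
    print(f"Sequential search: {total_ms} ms ({total_ms / 1000:.0f} s)")
    print(f"Over the real-time budget by a factor of {times_over_budget(NUM_CONFIGS):.0f}")
```

Even at this lower bound, a sequential search would take about 10 seconds per decision, roughly 200 times the 50-millisecond budget, which is why it could not run in real time.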

Data advantage

Data, the second big piece of the puzzle, is where Elluswamy believes Tesla has a unique advantage because it can tap into its fast-growing fleet to access the exact data needed to address the challenges. However, raw data alone is not enough. Training requires labeled data, and the traditional method of using human labelers would not scale to the large, multi-camera video networks.

“We need lots and lots of data to train these networks,” he said. “Hence, we have built a sophisticated auto-labeling pipeline that collects data from the fleet, runs computational algorithms in our data center, and then produces the labels to train these networks.”

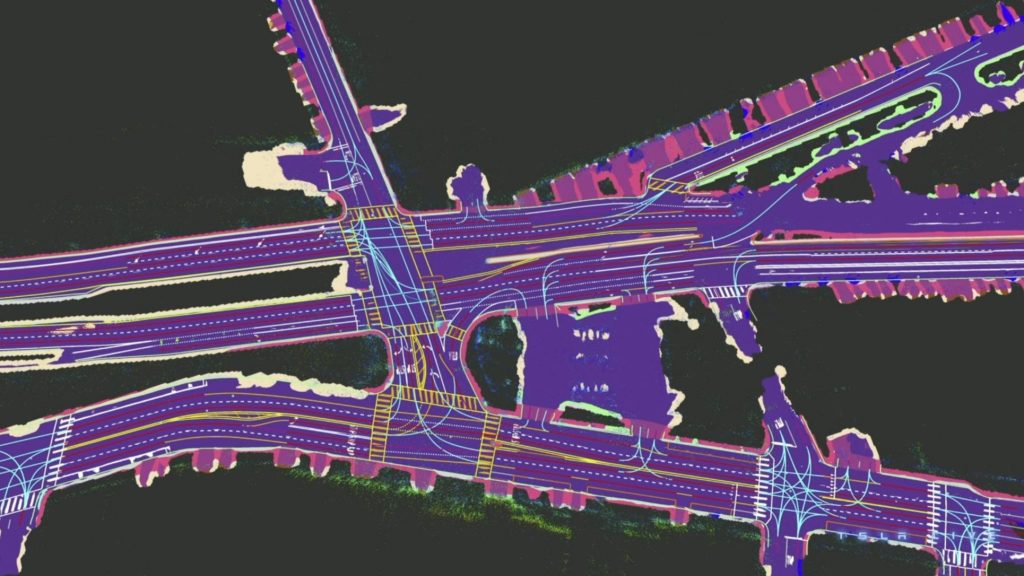

He demonstrated this with an impressive 3D reconstruction that took clips collected from various cars in the fleet and assembled them into a single unified representation of the world around the car.

“You can see all the lanes, the road boundaries, curb, crosswalks, even the text on the road being accurately reconstructed by these algorithms,” he said.

On top of that base reconstruction, Tesla engineers can build various simulations to produce an infinite variety of data to train for all the corner cases, he says. “We have a capable simulator that can synthesize adverse real weather, lighting conditions, and even the motion of other objects to test all the corner cases that might even be rare in the real world.”

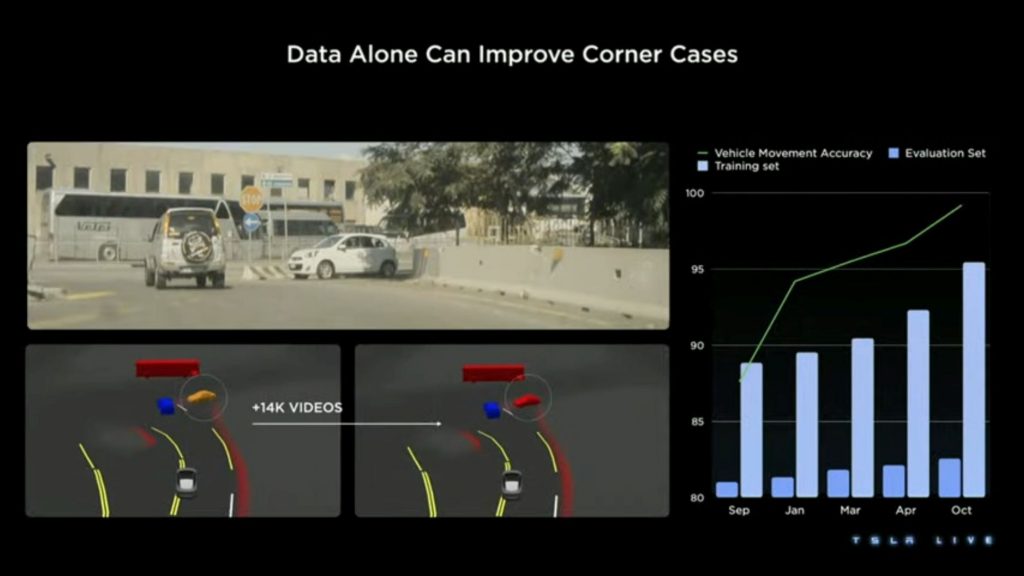

One real-world example of how the team solved a problem using the data approach involved false braking for cars parked on the side of the road. The team mined the Tesla fleet for similar cases.

“We added 14,000 videos to our training set,” said Elluswamy. “Once we trained the networks again with this new data, it now understands that [there] is no driver in this car, it must be parked, so then there is no need to brake. Every time we add data, the performance improves, and then we can do this for every kind of task that we have in our system.”

The team is increasingly applying this “data engine” concept as it identifies other challenging cases.

“We mine the fleet for such data, put it through our auto labeling system and produce the labels, add it to the training set, and once you have the newly trained models, we deploy it onto the fleet,” he summarized. “If we rinse and repeat this process, everything gets better and better.”
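The loop he describes can be sketched as a toy program. Everything here is hypothetical and illustrative: the `Clip` and `Model` classes, the scenario tags, and the rule that a model “handles” any scenario it has seen a label for are assumptions made for the sketch, not a description of Tesla’s pipeline.

```python
from dataclasses import dataclass, field


@dataclass
class Clip:
    clip_id: int
    scenario: str  # hypothetical tag, e.g. "parked_car"


@dataclass
class Model:
    # Toy stand-in for a trained network: it "handles" a scenario once the
    # training set contains at least one labeled example of that scenario.
    known_scenarios: set = field(default_factory=set)

    def handles(self, clip: Clip) -> bool:
        return clip.scenario in self.known_scenarios


def mine_fleet(fleet_clips, model):
    """Step 1: pick out the clips the current model gets wrong."""
    return [clip for clip in fleet_clips if not model.handles(clip)]


def auto_label(clips):
    """Step 2: attach labels without human labelers (here, just the tag)."""
    return [(clip, clip.scenario) for clip in clips]


def train(training_set):
    """Step 4: retrain on the enlarged training set."""
    return Model(known_scenarios={label for _, label in training_set})


def data_engine_iteration(fleet_clips, model, training_set):
    """One pass of mine, label, add to training set, retrain, deploy."""
    hard_clips = mine_fleet(fleet_clips, model)
    training_set = training_set + auto_label(hard_clips)  # step 3
    return train(training_set), training_set              # step 5: new model


if __name__ == "__main__":
    fleet = [Clip(1, "parked_car"), Clip(2, "left_turn"), Clip(3, "parked_car")]
    training_set = [(Clip(0, "left_turn"), "left_turn")]
    model = train(training_set)
    print("Failures before:", len(mine_fleet(fleet, model)))
    model, training_set = data_engine_iteration(fleet, model, training_set)
    print("Failures after: ", len(mine_fleet(fleet, model)))
```

In this toy version a single pass clears every failure; the “rinse and repeat” in the quote reflects that a real fleet keeps surfacing new cases, so the loop never really ends.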

Big compute requirements

The final critical piece of Tesla’s autonomy efforts is state-of-the-art computational power and speed.

That capability is needed to train the large models in a reasonable amount of time and to produce labels automatically. It applies not only to compute in the company’s data centers but also to compute in its cars.

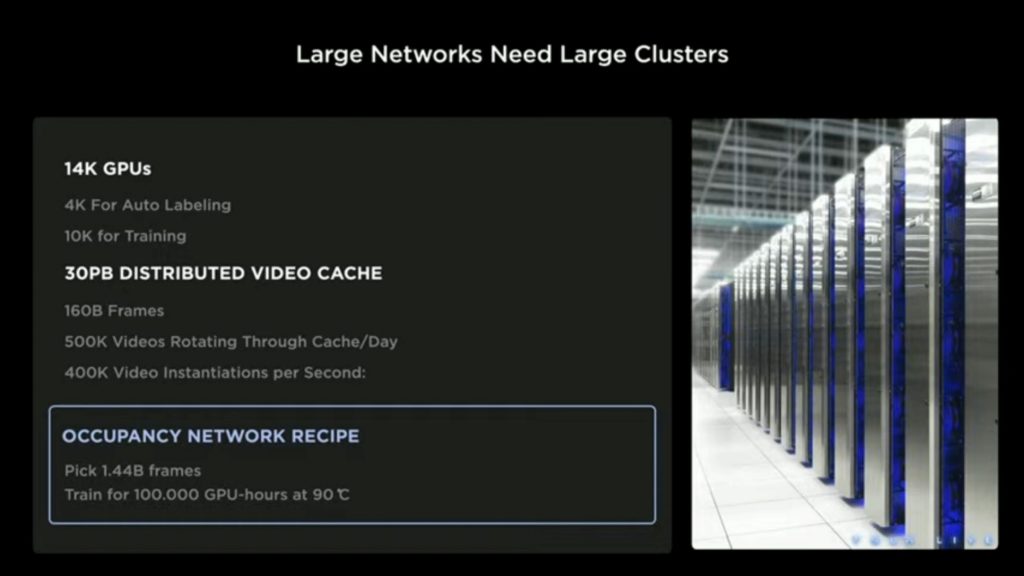

“On the back end, we have a 14,000-GPU cluster, and roughly 30% is used for auto labeling and the remaining 70% is used for training,” said Elluswamy. “We also have 30 petabytes of video cache, and this is growing to 200 petabytes.”

Other impressive numbers include 500,000 videos rotating through cache per day and 400,000 video instantiations per second.

He expects this to significantly increase once Tesla’s Dojo training supercomputer platform comes online.
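For a sense of scale, the quoted cluster figures can be turned into rough absolute numbers. All inputs below come from Elluswamy’s remarks; because he described the split as “roughly” 30/70, the GPU counts are approximations, and the per-second video rate is only a daily average.

```python
# Rough scale estimates from the figures quoted in the talk.
TOTAL_GPUS = 14_000
AUTO_LABEL_PERCENT = 30      # "roughly 30%" per the quote, so results are approximate
CACHE_NOW_PB = 30
CACHE_TARGET_PB = 200
VIDEOS_PER_DAY = 500_000
SECONDS_PER_DAY = 24 * 60 * 60


def gpu_split(total_gpus: int, label_percent: int) -> tuple[int, int]:
    """Return (auto-labeling GPUs, training GPUs) using integer arithmetic."""
    labeling = total_gpus * label_percent // 100
    return labeling, total_gpus - labeling


def cache_growth(now_pb: float, target_pb: float) -> float:
    """Factor by which the video cache is planned to grow."""
    return target_pb / now_pb


def videos_per_second(videos_per_day: int) -> float:
    """Average rate at which videos rotate through the cache."""
    return videos_per_day / SECONDS_PER_DAY


if __name__ == "__main__":
    labeling, training = gpu_split(TOTAL_GPUS, AUTO_LABEL_PERCENT)
    print(f"Auto-labeling GPUs: about {labeling}")
    print(f"Training GPUs:      about {training}")
    print(f"Cache growth:       {cache_growth(CACHE_NOW_PB, CACHE_TARGET_PB):.1f}x")
    print(f"Cache turnover:     {videos_per_second(VIDEOS_PER_DAY):.1f} videos/s on average")
```

That works out to about 4,200 GPUs for auto-labeling and 9,800 for training, with the video cache planned to grow nearly sevenfold.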

Preparing for the robotaxi fleet

Looking ahead, company engineers have been focusing on supporting the work of the Autopilot and AI teams to make the car drive itself and to enable a future of autonomous Tesla fleets. The related efforts involve “synchronization, permissions management, security, and privacy,” said David Lau, VP of Software Engineering at Tesla.

“We’ve also been thinking for years about all of the other pieces that we are going to need to manage a network of autonomous vehicles,” he said. “A lot of this has been happening behind the scenes in the form of platform-level functionality that we will leverage later, but you’ve seen some of it surface already in terms of features that our customers and our internal operations teams can benefit from.”

For instance, in 2021 Tesla built on the phone key in its mobile app to let customers share their car with anyone by sending an invitation in a text message or email. Last year, the company introduced profile synchronization, which synchronizes seat, steering, and mirror positions as well as settings, media favorites, and stored navigation locations across all vehicles in a customer’s account.

Tesla has developed an app for internal use that allows its engineering, manufacturing, delivery, and logistics staff to view, locate, and drive all Tesla-owned vehicles at their sites. At some service locations, customers can automatically add a Tesla-owned loaner vehicle to their mobile app accounts so that, with cloud-synchronized profiles and phone keys, it’s a completely seamless service experience.

“As soon as the customer walks up to that loaner, it behaves and feels exactly like their own car,” he said. “All this is built on top of end-to-end encryption and cryptographically signed commands so that customer data remains private and obscure, and the fleet only trusts commands from authorized parties.”
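The idea of a vehicle trusting only signed commands can be illustrated with a minimal sketch. Lau did not describe Tesla’s scheme, so this is a stand-in: it uses a shared-key HMAC from Python’s standard library rather than the public-key signatures a production system would more likely use, and the command fields and key are hypothetical.

```python
import hashlib
import hmac
import json


def sign_command(command: dict, key: bytes) -> str:
    """Produce an authentication tag over a canonical encoding of the command."""
    payload = json.dumps(command, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def vehicle_accepts(command: dict, signature: str, key: bytes) -> bool:
    """Accept the command only if the tag matches what the key would produce."""
    expected = sign_command(command, key)
    # compare_digest runs in constant time, which avoids leaking how many
    # leading characters of a forged signature happened to be correct.
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    key = b"demo-key-not-for-production"                    # hypothetical key
    command = {"action": "unlock", "vehicle": "loaner-1"}   # hypothetical fields
    signature = sign_command(command, key)

    tampered = {"action": "drive", "vehicle": "loaner-1"}
    print("Authorized command accepted:", vehicle_accepts(command, signature, key))
    print("Tampered command accepted:  ", vehicle_accepts(tampered, signature, key))
    print("Wrong key accepted:         ", vehicle_accepts(command, signature, b"other-key"))
```

A command altered in transit, or one signed by a party without the key, fails the check and is ignored, which is the property Lau describes.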