Robotic eyes that visibly resemble those of humans may help make autonomous vehicles safer for pedestrians, according to a new study.

Although research into autonomous vehicles currently focuses largely on creating machines that can navigate the world safely and efficiently by themselves, the new study examined the more human side of self-driving technology.

“There is not enough investigation into the interaction between self-driving cars and the people around them, such as pedestrians,” study senior author Takeo Igarashi, a professor at the University of Tokyo, said in a statement. “We need more investigation and effort into such interaction to bring safety and assurance to society regarding self-driving cars.”

One key difference between regular and autonomous vehicles is that any people within self-driving machines may not be paying full attention to the road, or there may be nobody at the wheel at all. This makes it difficult for pedestrians to gauge whether a vehicle has registered their presence or not, as there might be no eye contact or other cues from the people inside it.

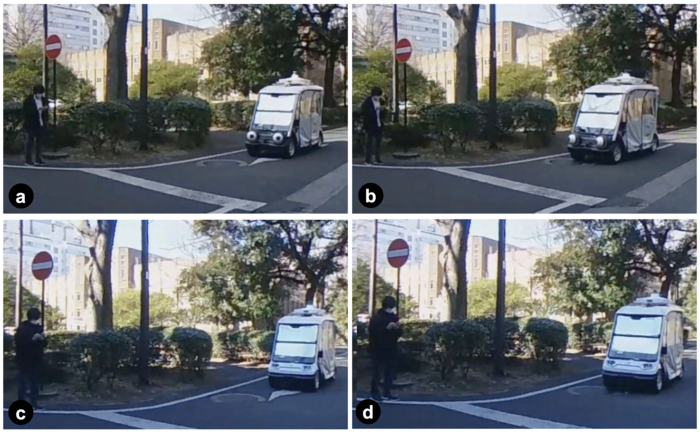

How, then, can pedestrians tell when an autonomous vehicle has noticed them and intends to stop? In experiments, scientists installed two large remote-controlled robotic eyes onto a self-driving golf cart from Japanese intelligent vehicle startup Tier IV.

The machine essentially resembled characters from the Pixar movie Cars. The researchers called it the “gazing car.”

The scientists tested four scenarios, two where the cart had eyes and two where it did not. They wanted to see whether putting moving eyes on the cart would affect people’s riskier behavior: in this case, whether people would still cross the road in front of a moving vehicle when in a hurry.

In the experiments, the cart either noticed a pedestrian and was intending to stop, or had not noticed them and was going to keep driving. When the cart had eyes, the eyes would either be looking towards the pedestrian (going to stop) or looking away (not going to stop).

Although this experiment had a hidden driver, it would obviously be dangerous to ask volunteers to choose whether or not to walk in front of a moving vehicle in real life. As such, the scientists recorded the scenarios using 360-degree video cameras and the 18 participants (nine women and nine men, aged 18 to 49 years, all Japanese) played through the experiment in virtual reality.

The volunteers experienced the scenarios multiple times in random order and were given three seconds each time to decide whether or not they would cross the road in front of the cart. The researchers recorded their choices and measured the error rates of their decisions — that is, how often they chose to stop when they could have crossed and how often they crossed when they should have waited.
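To make the two error types concrete, here is a minimal Python sketch of how such rates could be tallied. It is not the researchers' analysis code: the `Trial` record, the `error_rates` function, and the sample trials are all hypothetical, and the sketch assumes each rate is taken relative to the number of trials of the matching kind (cart stopping or not stopping), which the article does not specify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    """One hypothetical crossing decision (field names are assumptions)."""
    has_eyes: bool        # cart was fitted with robotic eyes in this trial
    cart_will_stop: bool  # ground truth: the cart intended to stop
    crossed: bool         # the participant chose to cross


def error_rates(trials):
    """Return (dangerous_rate, inefficient_rate) for a list of trials.

    dangerous:   crossed although the cart was not going to stop
    inefficient: waited although the cart was going to stop
    """
    not_stopping = [t for t in trials if not t.cart_will_stop]
    stopping = [t for t in trials if t.cart_will_stop]
    dangerous = sum(t.crossed for t in not_stopping)
    inefficient = sum(not t.crossed for t in stopping)
    dangerous_rate = dangerous / len(not_stopping) if not_stopping else 0.0
    inefficient_rate = inefficient / len(stopping) if stopping else 0.0
    return dangerous_rate, inefficient_rate


# Made-up illustrative data, not results from the study.
trials = [
    Trial(has_eyes=False, cart_will_stop=False, crossed=True),   # dangerous
    Trial(has_eyes=False, cart_will_stop=False, crossed=False),
    Trial(has_eyes=False, cart_will_stop=True, crossed=False),   # inefficient
    Trial(has_eyes=False, cart_will_stop=True, crossed=True),
    Trial(has_eyes=True, cart_will_stop=False, crossed=False),
    Trial(has_eyes=True, cart_will_stop=False, crossed=False),
    Trial(has_eyes=True, cart_will_stop=True, crossed=True),
    Trial(has_eyes=True, cart_will_stop=True, crossed=False),    # inefficient
]

for eyes in (False, True):
    subset = [t for t in trials if t.has_eyes == eyes]
    dangerous_rate, inefficient_rate = error_rates(subset)
    print(f"eyes={eyes}: dangerous={dangerous_rate:.2f}, "
          f"inefficient={inefficient_rate:.2f}")
```

Splitting the tally by condition (eyes or no eyes) mirrors the comparison the study describes; a fuller analysis would also group by participant.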

“The results suggested a clear difference between genders, which was very surprising and unexpected,” study lead author Chia-Ming Chang at the University of Tokyo said in a statement. “While other factors like age and background might have also influenced the participants’ reactions, we believe this is an important point, as it shows that different road users may have different behaviors and needs, that require different communication ways in our future self-driving world.”

In this study, the male volunteers made many dangerous road-crossing decisions, such as choosing to cross when the cart was not stopping, but the cart’s eye gaze reduced these errors. However, eye gaze made little difference for them in safe situations, that is, when the cart was going to stop and they could cross.

On the other hand, the female participants made more inefficient decisions, that is, choosing not to cross when the cart was intending to stop, and the cart’s eye gaze reduced these errors. However, eye gaze made little difference for them in unsafe situations.

Overall, the experiments showed that the eyes resulted in a smoother or safer crossing for everyone. But how did the eyes make the participants feel?

Some volunteers thought the eyes were cute. Others saw them as creepy or scary. Many male participants reported feeling that the situation was more dangerous when the eyes were looking away. Many female participants said they felt safer when the eyes looked at them.

“We focused on the movement of the eyes, but did not pay too much attention to their visual design in this particular study. We just built the simplest one to minimize the cost of design and construction because of budget constraints,” Igarashi said in a statement. “In the future, it would be better to have a professional product designer find the best design, but it would probably still be difficult to satisfy everybody. I personally like it. It is kind of cute.”

The researchers cautioned that this study was limited by a small number of participants playing out just one scenario. It is also possible that people might make different choices in virtual reality than in real life.

All in all, “moving from manual driving to auto driving is a huge change,” Igarashi said in a statement. “If eyes can actually contribute to safety and reduce traffic accidents, we should seriously consider adding them.”

In the future, “we would like to develop automatic control of the robotic eyes connected to the self-driving AI instead of being manually controlled, which could accommodate different situations,” Igarashi said in a statement. “I hope this research encourages other groups to try similar ideas — anything that facilitates better interaction between self-driving cars and pedestrians, which ultimately saves people’s lives.”

The scientists detailed their findings Sept. 17 at the International Conference on Automotive User Interfaces and Interactive Vehicular Applications in Seoul.