Radar sensing is seen as a key enabler for advanced driver assistance system (ADAS) features like adaptive cruise control, automatic emergency braking, and blind-spot detection. According to IDTechEx research published in a new report, “Automotive Radar 2024-2044: Forecasts, Technologies, Applications,” an average of 70% of new cars shipped in 2022 had a front-facing radar, while 30% had side radars.

However, as ADAS features grow more sophisticated and SAE Level 3 autonomous systems enter the market for the first time, radar technology needs to improve to meet the performance demands of these systems, according to the report’s author, Dr. James Jeffs, Senior Technology Analyst at IDTechEx.

One technology that could help is 4D imaging radar. Most radars have been limited to the “three dimensions” of azimuth (horizontal angle), distance, and velocity, but 4D radar adds the ability to determine elevation. With vertical resolution, a radar can in principle separate a stopped vehicle at ground level from the roof of a tunnel a few meters above it.

“However, if the vertical resolution is poor to the extent that the tunnel and car are still present in the same ‘pixel,’ then the situation has not been improved,” explained Jeffs. “This is where the distinction between 4D radar and 4D imaging radar comes into play. The imaging radar should have sufficient angular resolution that it can distinguish the tunnel and vehicle even at very long distances.”
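To put the tunnel example in numbers: two reflectors stacked a vertical distance Δh apart at range R are separated in elevation by roughly atan(Δh / R). The sketch below assumes a hypothetical 4 m gap between the stopped car and the tunnel roof (the text only says “a few meters”), treats both as point reflectors, and ignores the radar’s mounting height. It shows how quickly the angle the radar must resolve shrinks with distance.

```python
import math

def elevation_separation_deg(vertical_gap_m, range_m):
    """Approximate elevation angle (degrees) between two point reflectors
    stacked vertical_gap_m apart at horizontal distance range_m."""
    return math.degrees(math.atan(vertical_gap_m / range_m))

GAP_M = 4.0  # hypothetical car-to-tunnel-roof gap ("a few meters" in the text)
for range_m in (50, 100, 200):
    print(f"{range_m:>3} m: {elevation_separation_deg(GAP_M, range_m):.2f} deg")
```

For the car and tunnel to land in different “pixels,” the radar’s elevation resolution has to be finer than the printed angle at the range of interest.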

In fact, IDTechEx thinks that an imaging radar should have enough resolution to distinguish much smaller obstacles at long distances, such as a person standing on the road 100 m away.

“Assuming that the person is 5-6 ft tall, a resolution of around 1° would be needed to separate the person from the road,” said Jeffs.
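A quick check of the roughly 1° figure: an object of height h centered at range R subtends an angle of 2·atan(h / 2R). The sketch assumes the person stands upright and is viewed head-on at 100 m; the radar’s elevation resolution has to be on the order of this angle for the person to occupy a cell separate from the road.

```python
import math

FT_TO_M = 0.3048  # exact definition of the international foot

def angle_subtended_deg(size_m, range_m):
    """Full angle (degrees) subtended by an object of size_m, viewed
    face-on and centered at range_m."""
    return math.degrees(2 * math.atan(size_m / (2 * range_m)))

for height_ft in (5, 6):
    height_m = height_ft * FT_TO_M
    print(f"{height_ft} ft person at 100 m: {angle_subtended_deg(height_m, 100):.2f} deg")
```

The two heights give roughly 0.87° and 1.05°, which brackets the “around 1°” quoted.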

Detecting a person is one thing; correctly classifying the detection as a person from radar data alone is another. This is why radar is usually paired with a front-facing camera for applications like automatic emergency braking.

“At night-time, in foggy conditions, or in heavy rain, the camera might not be able to see either,” said Jeffs. “In these situations, there are a few options: add short or long-wave infrared detection to the vehicle, providing camera-like resolution with robustness to poor visibility conditions; add lidar to the vehicle, with radar-like ranging abilities but at a large cost; or improve the resolution of radar further.”

Radar has a natural physical limit to its resolving performance, known as the Rayleigh criterion: the smallest resolvable angle is inversely proportional to both the operating frequency and the aperture size. In practice, a typical automotive radar operating at 77 GHz with an antenna array 10 cm wide should be able to reach a resolution of about 2.8°. For context, a typical human eye can resolve around 0.005-0.01°, enough to see a 1-cm object at 100 m.
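Written out, the Rayleigh criterion is θ ≈ 1.22 λ / D, where the wavelength λ = c / f and D is the aperture width, so θ scales with 1 / (f·D). The sketch below evaluates it for the 77 GHz, 10 cm case. It assumes the classic 1.22 constant (strictly derived for a circular aperture) and lands at about 2.7°, close to the 2.8° quoted; the exact figure shifts slightly with the constant and the precise frequency chosen. It also checks the human-eye comparison.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def rayleigh_resolution_deg(freq_hz, aperture_m, k=1.22):
    """Angular resolution from the Rayleigh criterion, theta = k * lambda / D.

    k = 1.22 is the classic constant for a circular aperture; the exact
    constant for a real automotive antenna array is an assumption here.
    """
    wavelength_m = SPEED_OF_LIGHT / freq_hz
    return math.degrees(k * wavelength_m / aperture_m)

radar_res = rayleigh_resolution_deg(77e9, 0.10)  # 77 GHz radar, 10 cm array
one_cm_at_100m = math.degrees(0.01 / 100)       # small-angle size of 1 cm at 100 m
print(f"77 GHz, 10 cm aperture: {radar_res:.2f} deg")
print(f"1 cm at 100 m subtends: {one_cm_at_100m:.4f} deg")
```

A 1 cm object at 100 m subtends about 0.0057°, which is why the eye’s quoted 0.005-0.01° range is just enough to see it.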

One way to improve radar resolution is to raise the operating frequency. However, radar frequencies are set by regulation and cannot easily be changed. The other option is to increase the aperture size, which runs into practical challenges. Because resolution scales inversely with aperture, getting from 2.8° to 1° means enlarging the aperture from 10 cm to 28 cm.
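Because resolution is inversely proportional to aperture at a fixed frequency, the required aperture follows from simple scaling: D_new = D_old × θ_old / θ_new. The helper below is illustrative, not taken from the report. Applying the same scaling in both azimuth and elevation multiplies the array area by nearly eight, which is the integration problem Jeffs describes.

```python
def aperture_for_resolution(aperture_cm, resolution_deg, target_resolution_deg):
    """At a fixed frequency, resolution scales as 1 / aperture, so
    D_new = D_old * theta_old / theta_new."""
    return aperture_cm * resolution_deg / target_resolution_deg

new_aperture_cm = aperture_for_resolution(10, 2.8, 1.0)
# If the same improvement is needed in azimuth and elevation, both sides grow.
area_ratio = (new_aperture_cm / 10) ** 2
print(f"Aperture for 1 deg: {new_aperture_cm:.0f} cm")
print(f"Array area grows by about {area_ratio:.1f}x")
```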

“To get this resolution in both azimuth and elevation, the radar is now 28 cm x 28 cm, which will be challenging to integrate into the front bumper,” said Jeffs. “It will likely cause airflow issues to the radiator, could be difficult to protect from damage, and will cause the OEM’s aesthetics teams a bit of a headache.”

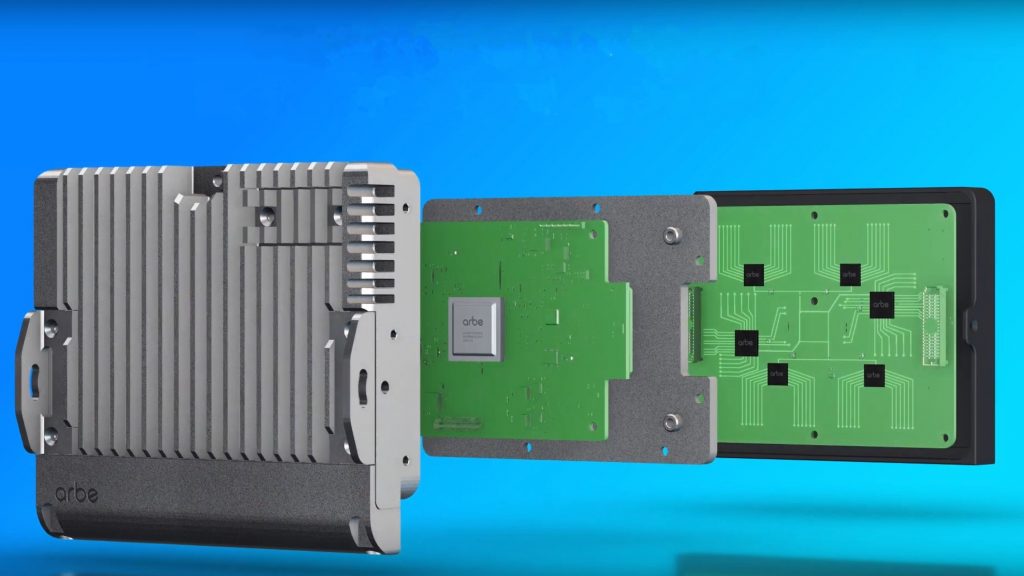

IDTechEx sees radars getting larger: Continental’s ARS 540, Bosch’s FR5+, and Arbe’s Phoenix all exceed 10 cm, but even the largest of these, the Phoenix, measures only 12.7 x 14.3 cm.

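To keep size down, the industry is turning to virtual channels. In a multiple-input multiple-output (MIMO) radar, each pairing of a transmit and a receive antenna acts as its own virtual channel, so N transmitters and M receivers yield N × M virtual channels from only N + M physical ones.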

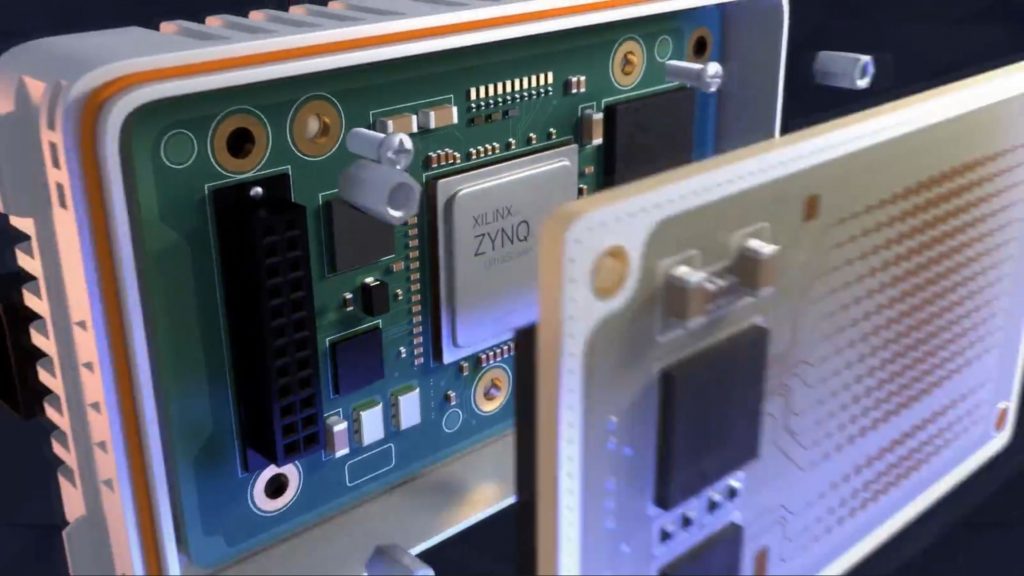

In the past, a 3D radar might have had one transmitting channel and three receiving channels, while some leading radars now combine four chips to get a 12-transmitter/16-receiver arrangement with 192 virtual channels. Arbe has developed a chipset that scales to 48 transmitting and 48 receiving channels in a single radar, giving 2304 virtual channels and helping it achieve 1° resolution in azimuth and 1.7° in elevation.
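The channel counts above follow from the MIMO principle: each transmit/receive pair behaves like one virtual element located at the sum of the two antenna positions, giving N × M virtual channels (12 × 16 = 192, 48 × 48 = 2304). The sketch assumes a simplified one-dimensional array with a hypothetical “filled” layout; real imaging radars spread their virtual channels over a 2D grid to cover azimuth and elevation, and the source does not describe any product’s actual antenna layout.

```python
import numpy as np

def mimo_virtual_array(tx_positions, rx_positions):
    """Virtual element positions of a MIMO radar: each Tx/Rx pair acts as one
    element located at the sum of its Tx and Rx positions."""
    return np.add.outer(np.asarray(tx_positions), np.asarray(rx_positions)).ravel()

def filled_linear_layout(n_tx, n_rx):
    """Hypothetical 1D layout in units of half a wavelength: receivers spaced
    lambda/2 apart, transmitters spaced n_rx * lambda/2 apart, which tiles a
    gap-free uniform virtual array."""
    rx = np.arange(n_rx)
    tx = np.arange(n_tx) * n_rx
    return tx, rx

for n_tx, n_rx in [(1, 3), (12, 16), (48, 48)]:
    tx, rx = filled_linear_layout(n_tx, n_rx)
    virtual = mimo_virtual_array(tx, rx)
    span = virtual.max() - virtual.min()
    print(f"{n_tx} Tx x {n_rx} Rx -> {virtual.size} virtual channels, "
          f"virtual aperture {span} x lambda/2")
```

With this layout, only n_tx + n_rx physical antennas (28 in the 12/16 case) synthesize 192 evenly spaced virtual elements.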

Another way to overcome the challenges of building one large radar is to distribute its function across smaller units.

One example IDTechEx points to is Zendar’s approach, in which two lower-performance radars mounted at opposite ends of a bumper work together, increasing the effective aperture from less than 10 cm to 1.5-2.0 m. Combined, the two radars achieve an azimuth resolution of just over 0.1°.
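Plugging the effective aperture into the Rayleigh relation θ ≈ 1.22 λ / D, at an assumed 77 GHz, shows why spreading two radars across the bumper helps so much: widening D from 10 cm to 1.5-2.0 m shrinks the resolvable angle by a factor of 15-20. The resulting estimate of roughly 0.14-0.18° is in the same range as the “just over 0.1°” quoted. This simple formula only captures the main-lobe width set by the overall span; it ignores the grating lobes that a gapped, two-part aperture produces, which any practical system of this kind would need to handle.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def rayleigh_resolution_deg(freq_hz, aperture_m, k=1.22):
    """theta = k * lambda / D in degrees; k = 1.22 is an assumed constant."""
    return math.degrees(k * (SPEED_OF_LIGHT / freq_hz) / aperture_m)

for aperture_m in (0.10, 1.5, 2.0):
    res = rayleigh_resolution_deg(77e9, aperture_m)
    print(f"{aperture_m:.2f} m effective aperture: {res:.2f} deg")
```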

Another approach is to build separate antenna boards for each channel and place them across the bumper. This is the development route being explored by Plastic Omnium and Greenerwave.

Software is another key part of the discussion: according to Jeffs, nearly all the leading companies use some kind of “super-resolution” software to improve their performance. In addition, some startups are developing dedicated algorithms that improve radar resolution without any physical changes to the hardware.

Zadar Labs uses technologies like machine learning, AI, and encoded transmission signals to improve radar performance. Spartan uses an algorithm based on research for F-18 and F-35 fighter-jet applications.

Super-resolution software can improve angular resolution by a factor of roughly 4, taking a standard radar with 2.8° angular resolution down to around 0.5-1°, and lower still if the radar already employs some of the other techniques discussed here.
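Vendors do not disclose their super-resolution algorithms, so the sketch below uses a different, textbook technique purely as an illustration: MUSIC (MUltiple SIgnal Classification), a subspace method for direction-of-arrival estimation. It assumes a hypothetical 8-element uniform linear array with half-wavelength spacing, whose conventional resolution limit near broadside is roughly 2/N rad (about 14°), and two equal-power, uncorrelated targets only 10° apart at 30 dB SNR. MUSIC separates them by exploiting the eigenstructure of the signal covariance rather than relying on the beamwidth. Real gains depend heavily on SNR, snapshot count, and target correlation, so this is not a model of any product mentioned above.

```python
import numpy as np

def steering_vector(theta_deg, n_elements):
    """Response of a uniform linear array with half-wavelength spacing."""
    n = np.arange(n_elements)
    return np.exp(1j * np.pi * n * np.sin(np.deg2rad(theta_deg)))

def simulate_snapshots(angles_deg, n_elements, n_snapshots, snr_db, rng):
    """Uncorrelated unit-power complex targets plus complex white noise."""
    A = np.column_stack([steering_vector(a, n_elements) for a in angles_deg])
    shape_s = (len(angles_deg), n_snapshots)
    S = (rng.standard_normal(shape_s) + 1j * rng.standard_normal(shape_s)) / np.sqrt(2)
    shape_n = (n_elements, n_snapshots)
    noise_std = 10 ** (-snr_db / 20)
    noise = noise_std * (rng.standard_normal(shape_n)
                         + 1j * rng.standard_normal(shape_n)) / np.sqrt(2)
    return A @ S + noise

def music_spectrum(X, n_targets, grid_deg):
    """MUSIC pseudo-spectrum 1 / ||E_n^H a(theta)||^2 over an angle grid."""
    n_elements = X.shape[0]
    R = X @ X.conj().T / X.shape[1]          # sample covariance matrix
    _, eigvecs = np.linalg.eigh(R)           # eigenvalues in ascending order
    noise_subspace = eigvecs[:, : n_elements - n_targets]
    spectrum = np.empty(len(grid_deg))
    for i, theta in enumerate(grid_deg):
        proj = noise_subspace.conj().T @ steering_vector(theta, n_elements)
        spectrum[i] = 1.0 / np.real(np.vdot(proj, proj))
    return spectrum

def strongest_peaks(spectrum, grid_deg, k):
    """Angles of the k largest interior local maxima, sorted by angle."""
    peaks = [i for i in range(1, len(spectrum) - 1)
             if spectrum[i] > spectrum[i - 1] and spectrum[i] >= spectrum[i + 1]]
    peaks.sort(key=lambda i: spectrum[i], reverse=True)
    return sorted(float(grid_deg[i]) for i in peaks[:k])

rng = np.random.default_rng(0)
N_ELEMENTS = 8
true_angles = [0.0, 10.0]
print(f"Conventional limit ~ {np.degrees(2 / N_ELEMENTS):.1f} deg")
X = simulate_snapshots(true_angles, N_ELEMENTS, n_snapshots=200, snr_db=30, rng=rng)
grid = np.linspace(-60, 60, 1201)  # 0.1 deg steps
estimates = strongest_peaks(music_spectrum(X, 2, grid), grid, k=2)
print("MUSIC estimates (deg):", [round(e, 1) for e in estimates])
```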