Although people may unconsciously trust AI to make ethical decisions when driving autonomous vehicles, the opinions they express on the issue may depend strongly on the society in which they live, a study finds.

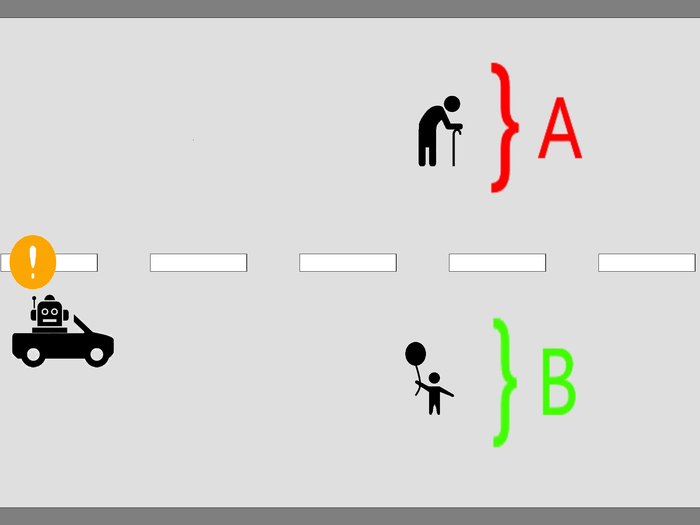

To see how ready societies may be for AIs to make ethical decisions, such as those involved in driving autonomous cars, scientists in Japan conducted two experiments. In the first, the researchers presented 529 people with an ethical dilemma a driver might face — an unavoidable collision where the driver had to crash the car into one group of people or another. The decision would cause severe harm to one group but save the lives of the other. The experiment participants had to rate the decision when the driver was a human and also when the driver was an AI. This experiment was designed to measure the bias that people might have against AI decision-making.

In the second experiment, the researchers presented 563 people with two scenarios. One involved a hypothetical government that had already decided to allow autonomous cars to make ethical decisions. The other scenario allowed the participants to vote on whether to allow autonomous cars to make ethical decisions. In both cases, the subjects could choose to be in favor of or against the decisions made by the technology. This experiment was designed to examine how people reacted to the debate over AI ethical decisions once they become part of social and political discussions, and to see how they reacted to two different ways of introducing AI into society.

The scientists found that people did not have a significant preference for either the human or the AI driver when asked to judge the drivers' ethical decisions. However, when people were asked for their explicit opinion, they expressed a stronger opinion against the AI.

The researchers suggested the discrepancy between these results arose from two different elements. First, they suggested that people believe society as a whole does not want ethical decision-making from AIs, and make this opinion known when asked.

“Indeed, when participants are asked explicitly to separate their answers from those of society, the difference between the permissibility for AI and human drivers vanishes,” study lead author Johann Caro-Burnett, an assistant professor in the Graduate School of Humanities and Social Sciences at Hiroshima University, said in a statement.

Second, when this new technology is introduced, allowing discussion of the topic produces mixed results depending on the country.

“In regions where people trust their government and have strong political institutions, information and decision-making power improve how subjects evaluate the ethical decisions of AI,” Caro-Burnett said in the statement. “In contrast, in regions where people do not trust their government and have weak political institutions, decision-making capability deteriorates how subjects evaluate the ethical decisions of AI.”

All in all, fear of AI ethical decision-making may not be intrinsic to individuals. Instead, “this rejection of AI comes from what individuals believe is the society’s opinion,” study senior author Shinji Kaneko, a professor in the Graduate School of Humanities and Social Sciences at Hiroshima University, said in a statement.

Similar findings will likely apply to other machines and robots, the researchers said. “Therefore, it will be important to determine how to aggregate individual preferences into one social preference,” Kaneko said in a statement. “Moreover, this task will also have to be different across countries, as our results suggest.”

The scientists detailed their findings in the Journal of Behavioral and Experimental Economics.