Based on seemingly daily technology advances, the availability of truly autonomous vehicles looks to be just a mile marker away—if important systems issues can be resolved.

Impressive advances have been made in sensor fusion, which combines cameras, radar, LiDAR and GNSS to achieve exceptional navigational accuracy in real time. But subject matter experts, many of whom have spent more than a decade on this engineering challenge, point to essential, and sometimes overlooked, issues in the systems and principles of autonomous operation that must be resolved before autonomous vehicles can become an integral part of our daily lives.

Chaminda Basnayake is principal engineer at Locata, an Australian positioning systems company with a subsidiary in Las Vegas, and a former senior automotive safety systems engineer with more than a decade of experience in the field. “Strictly from a technical point of view, I believe we have all the technology pieces we need to do autonomous driving in pretty much any environment. However, there are many questions around deployability [affordability to go on commercial vehicles], serviceability, scalability, human factors [both within and outside the vehicle] and environmental factors that are either not well understood or misunderstood.”

At the heart of these concerns is a focus on two specific areas—localization and numerically guaranteed system integrity—that will guide the evolution of autonomous vehicles.

POSITION CONFIDENCE

Autonomous vehicles must identify pedestrians, buildings, cars and other objects, and then make decisions about movement based on that information. But first, a vehicle must know where it is in space. That starts with the fundamental principle of localization: using sensor data to estimate a vehicle’s position and orientation over time.

Matthew Spenko, associate professor of mechanical engineering at the Illinois Institute of Technology (IIT) and one of the lead researchers in the university’s Autonomous Systems Group, explained: “While robotics professionals have been dealing with localization for decades, those localization techniques may not be good enough once there are large numbers of ‘robots’ in life-critical situations. We need to develop analytical methods that prove it.”

Autonomous vehicle sensors can be divided into two categories: perception or relative sensors (e.g., radar, camera, LiDAR) and absolute sensors (e.g., maps, GNSS, IMU). The perception and processing system must be tuned to the specific software in use to meet the stringent performance, safety, cost, scale and reliability requirements of this space.

“While there’s been some debate about whether absolute or relative sensors are better, our perspective is that for autonomous vehicles they will complement each other once all absolute sensor types are available,” said Curtis Hay, a technical fellow at General Motors focused on developing precise GNSS, V2X and map technology for autonomous and automated vehicles. “The autonomous vehicles of the future will have to have a fusion of sensors that take advantage of absolute measurements blended with relative sensors combined with artificial intelligence [AI]. It must know when to rely on perception or absolute sensors depending on the environment.”
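To make the absolute/relative distinction concrete, the minimal Python sketch below blends a relative sensor (wheel odometry, which reports distance travelled) with an absolute one (a GNSS fix) using a one-dimensional Kalman filter along the direction of travel. It is an illustration under simplifying assumptions, not GM's architecture: the class name, the noise values and the single along-track state are all hypothetical, and production systems estimate full position, velocity and attitude from many more sensors.

```python
from dataclasses import dataclass


@dataclass
class AlongTrackFilter:
    """Hypothetical 1-D Kalman filter for along-track position (metres)."""
    position: float        # current position estimate (m)
    variance: float        # variance of that estimate (m^2)
    odometry_noise: float  # variance added by each odometry step (m^2)

    def predict(self, distance_travelled: float) -> None:
        # Relative sensor: move the estimate forward; uncertainty always grows.
        self.position += distance_travelled
        self.variance += self.odometry_noise

    def update(self, gnss_position: float, gnss_variance: float) -> None:
        # Absolute sensor: blend in the GNSS fix, weighted by the Kalman gain.
        gain = self.variance / (self.variance + gnss_variance)
        self.position += gain * (gnss_position - self.position)
        self.variance *= 1.0 - gain


f = AlongTrackFilter(position=0.0, variance=1.0, odometry_noise=0.0)
f.predict(10.0)        # odometry says the car moved 10 m
f.update(11.0, 1.0)    # GNSS says 11 m, with equal uncertainty
print(f.position, f.variance)  # 10.5 0.5
```

When GNSS drops out (a tunnel, an urban canyon), only `predict` runs and the variance keeps growing, which is one concrete reason the vehicle must know when to lean on one kind of sensor rather than the other.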

One enabling development underway today is the adoption of lower-cost, more precise GNSS receivers and higher-resolution, higher-frame-rate cameras. That’s happening, but manufacturers and developers must also be able to assure positioning with a high degree of confidence.

THE PROBABILITY OF SUCCESS

The ISO 26262 functional safety standard for road vehicles classifies hazards by severity, exposure and controllability and assigns each hazardous event an Automotive Safety Integrity Level (ASIL); the integrity requirements ascend from ASIL A through ASIL B and ASIL C to ASIL D. For instance, compliance with ASIL B doesn’t necessarily require sensor redundancy, but ASIL D, the most stringent level, demands the measures needed to make a specific automated vehicle function fail-safe.
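How severity (S), exposure (E) and controllability (C) combine into an ASIL can be sketched in a few lines. The sketch below relies on a widely used shortcut: summing the class numbers (S1 to S3, E1 to E4, C1 to C3) reproduces the ISO 26262-3 determination table, with a sum of 10 giving ASIL D, 9 ASIL C, 8 ASIL B, 7 ASIL A and anything lower giving QM (quality management, meaning no ASIL is assigned). The function name is hypothetical, and the standard's own table remains the authority.

```python
ASIL_BY_SUM = {10: "ASIL D", 9: "ASIL C", 8: "ASIL B", 7: "ASIL A"}


def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """Map S0-S3, E0-E4 and C0-C3 classes to an ASIL (or QM)."""
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("S must be 0-3, E 0-4 and C 0-3")
    if 0 in (severity, exposure, controllability):
        # S0, E0 or C0: no ASIL is assigned, quality management only.
        return "QM"
    return ASIL_BY_SUM.get(severity + exposure + controllability, "QM")


print(determine_asil(3, 4, 3))  # ASIL D: life-threatening, frequent, hard to control
print(determine_asil(3, 4, 2))  # ASIL C
print(determine_asil(1, 2, 2))  # QM
```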

GM’s Hay: “There’s a lot of analysis underway to demonstrate levels of ISO 26262 compliance. If we talk about Levels 4/5 depending on GNSS for real-time decision-making and control, then adherence to the Stanford model to specify alarm thresholds is needed.”
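We read Hay's “Stanford model” as the Stanford integrity diagram used in aviation (an assumption). It sorts every epoch by comparing the actual position error (PE) with the protection level (PL) the system reports and with the alert limit (AL) the operation can tolerate. A minimal sketch of that classification follows; the function name and the 1.5 m alert limit in the usage lines are hypothetical.

```python
def stanford_region(position_error: float, protection_level: float,
                    alert_limit: float) -> str:
    """Classify one epoch into a region of a Stanford integrity diagram."""
    if protection_level > alert_limit:
        # The system itself declares the bound too large to use.
        if position_error > protection_level:
            return "unavailable, misleading"
        return "unavailable"
    if position_error <= protection_level:
        return "normal operation"  # the bound held and was usable
    if position_error <= alert_limit:
        return "misleading information"  # bound broken, error still tolerable
    return "hazardously misleading information"  # bound broken, error beyond limit


ALERT_LIMIT_M = 1.5  # hypothetical lateral alert limit
for pe, pl in [(0.3, 0.8), (1.0, 0.8), (2.0, 0.8), (0.5, 2.0)]:
    print(pe, pl, stanford_region(pe, pl, ALERT_LIMIT_M))
```

Alarm thresholds are then chosen so that the hazardously misleading region is entered no more often than the allowed integrity risk.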

To this end, Hexagon’s Positioning Intelligence (PI) Division is in the third year of a leading-edge research program whose goal is to define and quantify error bounds and assure the integrity of GNSS measurements affected by faults and anomalous conditions. The research, conducted by specialists at the Illinois Institute of Technology, Stanford University, and Virginia Polytechnic Institute and State University, updates and expands concepts for high-integrity carrier-phase algorithms, as well as threat models and safety monitors drawn from aviation applications. Early developments and demonstrations hold tremendous promise for achieving viable integrity performance in autonomous vehicle applications.

Hexagon PI is currently testing a prototype of a high-integrity precise point positioning (PPP) solution and aims to complete development and testing in the coming years, in time for the first generation of vehicle autonomy features designed to leverage high-integrity GNSS. Studies will continue through 2019, with the goal of delivering some of the industry’s first error bounds and numerical guarantees with assigned probabilities.

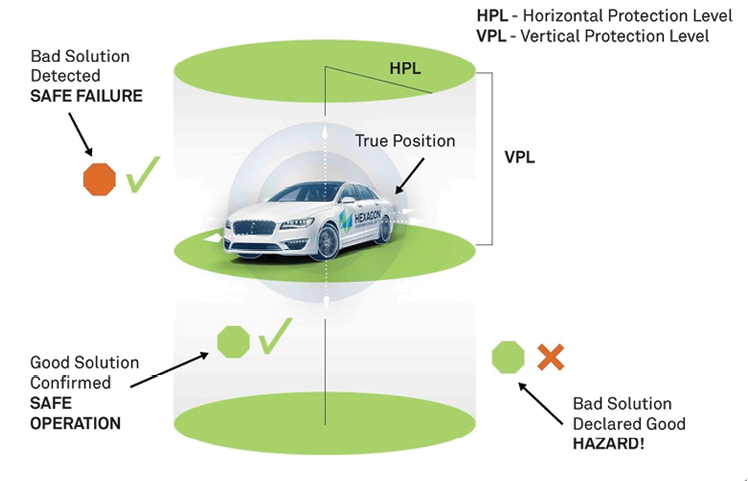

One of the lead investigators, Todd Walter, director of the Wide-Area Differential GNSS Laboratory at Stanford University, explained: “Integrity is about quantifying a position with a numerical guarantee, which is very different than reliability. Quantifying GNSS integrity ensures the values derived are accurate in nominal and fault conditions, making sure that errors are not occurring more frequently than expected and looking beyond the norm to determine probabilities of very rare events.”
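One standard way to turn a “numerical guarantee” into a number is a protection level: a bound that the position error should exceed only with a small, specified probability. The minimal sketch below assumes, purely for illustration, that the nominal error is zero-mean Gaussian with a known standard deviation; real integrity methods must overbound heavier-tailed errors and treat fault modes separately. The function name and values are hypothetical.

```python
from statistics import NormalDist


def protection_level(sigma_m: float, integrity_risk: float) -> float:
    """Bound PL such that P(|error| > PL) = integrity_risk for N(0, sigma^2) error."""
    # Two-sided tail: each side gets half of the allowed risk.
    k = NormalDist().inv_cdf(1.0 - integrity_risk / 2.0)
    return k * sigma_m


# With a 1e-7 risk the multiplier is about 5.33, so a 0.2 m sigma gives ~1.07 m.
print(round(protection_level(0.2, 1e-7), 2))
```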

Walter noted that the third component of the integrity conversation is the time to alert: the maximum time allowed between the onset of a hazardous positioning error and the alert that warns the user. In aviation, for instance, the time to alert typically ranges from six seconds down to two seconds.
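Checking a monitor against a time-to-alert requirement comes down to measuring the delay between the moment the error first exceeds the alert limit and the first alert that follows. The sketch below assumes a simple log of (time, position error, alert flag) epochs; the function name, the log format and the two-second requirement in the example are hypothetical, chosen only to echo the aviation range Walter cites.

```python
import math
from typing import Iterable, Optional, Tuple

Epoch = Tuple[float, float, bool]  # (time_s, position_error_m, alert_raised)


def time_to_alert(epochs: Iterable[Epoch], alert_limit_m: float) -> Optional[float]:
    """Delay from the first alert-limit violation to the first alert at or after it.

    Returns None if the error never exceeds the limit, math.inf if no alert follows.
    """
    onset = None
    for time_s, error_m, alert_raised in epochs:
        if onset is None and error_m > alert_limit_m:
            onset = time_s  # hazardous condition begins
        if onset is not None and alert_raised:
            return time_s - onset
    return None if onset is None else math.inf


log = [(0.0, 0.4, False), (1.0, 0.9, False), (2.0, 1.8, False),
       (3.0, 2.1, False), (4.0, 2.3, True)]
delay = time_to_alert(log, alert_limit_m=1.5)
print(delay, delay <= 2.0)  # 2.0 True: alert arrived within a 2 s requirement
```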

“We spend a lot of time thinking of things that could go wrong, such as multipath, ionosphere and satellite errors,” Walter added. “Now we in the industry must assign a probability to each and make sure that protection is in place.”
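Assigning a probability to each threat is often formalized as an integrity risk budget: for each threat, the probability that the fault occurs is multiplied by the probability that the monitors miss it, and the sum (plus the risk that fault-free errors exceed the bound) must stay within the allowed total. The threat names below come from Walter's quote, but every probability in this sketch is invented for illustration; none comes from the Hexagon research.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Threat:
    name: str
    prior: float             # probability the fault occurs (per hour, hypothetical)
    missed_detection: float  # probability the safety monitor fails to catch it

    def residual_risk(self) -> float:
        # Risk only materializes if the fault occurs AND goes undetected.
        return self.prior * self.missed_detection


def check_budget(threats: Iterable[Threat], nominal_risk: float,
                 budget: float) -> Tuple[float, bool]:
    """Total integrity risk and whether it fits the allocated budget."""
    total = nominal_risk + sum(t.residual_risk() for t in threats)
    return total, total <= budget


threats = [
    Threat("satellite fault", prior=1e-5, missed_detection=1e-3),
    Threat("ionosphere anomaly", prior=1e-4, missed_detection=1e-4),
    Threat("multipath", prior=1e-3, missed_detection=1e-5),
]
total, ok = check_budget(threats, nominal_risk=1e-8, budget=1e-7)
print(f"{total:.1e} {ok}")  # 4.0e-08 True
```

Simply adding the contributions is a conservative union-bound approximation; it never understates the combined risk.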

SETTING OPERATIONAL BOUNDS

Further, integrity bounds need to be established for autonomous cars operating alongside human drivers.

Locata’s Basnayake outlined the issue: “Remember, even the aviation industry, which added automation decades ago, does not have commercial fully autonomous planes. In fact, takeoffs and landings are still largely manual, with highly trained pilots working within a heavily regulated space. With autonomous vehicles, we’re introducing similar capabilities into a much more complex environment with ordinary drivers or operators. Recent Boeing 737 Max 8 crashes are an unfortunate example of how this can go wrong even within a much more regulated environment.”

The tendency for drivers (or operators) to misuse or abuse autonomous features could be another major issue, as could conditions that designers never anticipated. Basnayake recalled the DARPA Urban Challenge of 2007, which required autonomous vehicles to complete a 96-kilometer (60-mile) urban course in less than six hours. The rules required vehicles to obey all traffic regulations while negotiating other traffic and obstacles and merging into traffic.

“The vehicles completed the course averaging approximately 14 mph—and yet, one of the biggest problems encountered by the vehicle that went on to win the race was a system fault at the start,” he said. “In that instance, a large Jumbotron behind the car prevented the sensors from operating correctly because of interference. It was unexpected and certainly unplanned.”

The example illustrates how complex it is to build in redundancy and to determine integrity bounds around potential faults and failures. Examples of faults, failures and attacks include loss of GNSS, faulty sensor information, and attacks on the communication channels that vehicles use to exchange critical information with each other and with infrastructure.

Basnayake: “So in the context of positioning, for example, we have to be able to use GNSS and other positioning information and come up with fail-safe methods of operation to realize our dream of full automation.”
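A fail-safe positioning scheme of the kind Basnayake describes can be sketched as a consistency check between independent sources with graceful degradation. The minimal sketch below, with hypothetical names and thresholds, compares a GNSS fix with a dead-reckoned position: if they agree, both are used; if GNSS is missing or disagrees, the vehicle relies on dead reckoning only while its error bound stays within the alert limit, and otherwise falls back to a minimal-risk maneuver (for example, pulling over).

```python
import math
from enum import Enum
from typing import Optional, Tuple

Position = Tuple[float, float]  # (east_m, north_m) in a local frame


class Mode(Enum):
    GNSS_AND_DEAD_RECKONING = "gnss + dead reckoning"
    DEAD_RECKONING_ONLY = "dead reckoning only"
    MINIMAL_RISK_MANEUVER = "minimal-risk maneuver"


def select_mode(gnss: Optional[Position], dead_reckoning: Position,
                dr_error_bound_m: float, consistency_threshold_m: float,
                alert_limit_m: float) -> Mode:
    """Cross-check GNSS against dead reckoning and degrade gracefully."""
    if gnss is not None:
        residual = math.dist(gnss, dead_reckoning)
        if residual <= consistency_threshold_m:
            return Mode.GNSS_AND_DEAD_RECKONING  # independent sources agree
    # GNSS is unavailable or inconsistent: do not trust it this epoch.
    if dr_error_bound_m <= alert_limit_m:
        return Mode.DEAD_RECKONING_ONLY
    # Dead-reckoning drift has outgrown the alert limit: no trustworthy position.
    return Mode.MINIMAL_RISK_MANEUVER


print(select_mode((0.0, 0.0), (0.3, 0.4), 0.5, 1.0, 1.5).value)
print(select_mode((5.0, 0.0), (0.0, 0.0), 0.5, 1.0, 1.5).value)
print(select_mode(None, (0.0, 0.0), 2.0, 1.0, 1.5).value)
```

Note the limitation: with only two sources, a disagreement reveals that something is wrong but not which source is at fault. Isolating the fault needs a third, independent source, which is one reason redundancy matters.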

BEHAVIORAL ADAPTATION

Along with localization, real-time mapping is one of the essential systems that must be developed to facilitate safe operations.

“Mapping is a scalability problem,” Basnayake noted. “Currently, most autonomous fleets are geographically restricted in order to keep the mapping problem simple and manageable. We as an industry have to do more work on how that road to full autonomy will look. It could be restricted spaces [likely], or some form of connected systems, or a combination of both.”

The idea is not just to detect the presence of other objects, but to identify what an object is and how it’s likely to behave, and ultimately how that should affect our vehicle’s own on-road behavior.

There is a great deal of ongoing research and development within the auto industry to use low-cost perception sensors in retail vehicles to create high-definition autonomous vehicle maps. This vision requires large amounts of perception data to be offboarded to an AI application within the cloud, and also requires a mechanism for that crowd-sourced map to be delivered to the target vehicle. While there are technology and commercial challenges to overcome to make that vision a reality, many innovative minds around the world are working the problem.
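At its simplest, the cloud side of crowd-sourced mapping merges many vehicles' reports of the same landmark into one map entry and withholds entries that lack support. The sketch below assumes reports already carry a landmark ID and a position in a common local frame; the function name, thresholds and data are hypothetical and do not describe any particular company's system.

```python
from collections import defaultdict
from statistics import fmean, pstdev
from typing import Dict, Iterable, List, Tuple

Report = Tuple[str, float, float]  # (landmark_id, east_m, north_m)


def aggregate_landmarks(reports: Iterable[Report], min_reports: int = 3,
                        max_spread_m: float = 0.5) -> Dict[str, Tuple[float, float]]:
    """Average crowd-sourced landmark reports into map positions."""
    grouped: Dict[str, List[Tuple[float, float]]] = defaultdict(list)
    for landmark_id, east, north in reports:
        grouped[landmark_id].append((east, north))

    published = {}
    for landmark_id, points in grouped.items():
        if len(points) < min_reports:
            continue  # too few independent vehicles have seen it
        easts = [p[0] for p in points]
        norths = [p[1] for p in points]
        if max(pstdev(easts), pstdev(norths)) > max_spread_m:
            continue  # reports disagree; hold back for more data
        published[landmark_id] = (fmean(easts), fmean(norths))
    return published


reports = [("sign-17", 10.0, 5.0), ("sign-17", 10.2, 5.1), ("sign-17", 9.8, 4.9),
           ("pole-3", 40.0, 2.0), ("pole-3", 40.1, 2.0)]
print(aggregate_landmarks(reports))  # only sign-17 has enough reports
```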

For example, BMW is partnering with Israeli technology company Mobileye, an Intel subsidiary, to provide camera-based information about the driving environment, relying on a combination of artificial intelligence and crowdsourcing to map in real time. BMW expects to offer a mapping package for highly automated driving on motorways by 2021. Self-driving technology development company Waymo LLC is looking to automatically generate high-quality “neural nets” that enable vehicles to interpret, identify and track objects very quickly. In a recent blog post, Waymo also described how its vehicles use machine learning to identify and respond to emergency vehicles and to execute tricky driving maneuvers such as unprotected left turns.

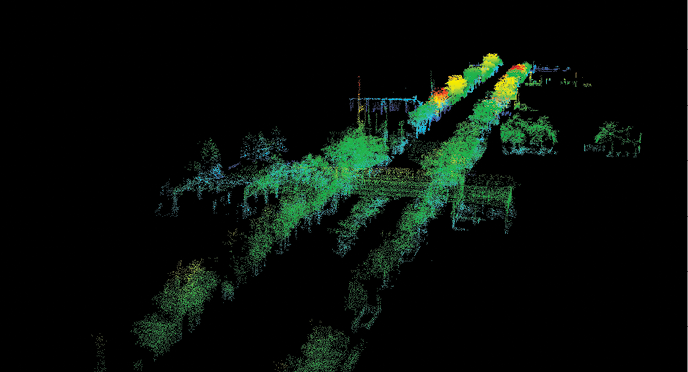

Autonomous vehicle system developers will continue to look for solutions such as RoboSense’s recent award-winning RS-LiDAR-M1, which the Chinese company describes as the world’s first MEMS-based smart LiDAR sensor for self-driving passenger vehicles. With embedded AI algorithms and a system on a chip (SoC), the RS-LiDAR-M1 can collect and interpret high-definition 3D point cloud data and process road data in real time, synchronizing high-precision positioning output with traffic signage, lane markings, driving areas, road curbs, and obstacle detection, tracking and classification.

As systems continue to evolve, more emphasis will have to be placed on the core principles of safe autonomous driving. From an integrity perspective, for example, a developer must know how often features extracted from sensor data are mis-associated with map landmarks.

“For instance, mis-associating an extracted feature from the environment, like a lamppost, with the wrong landmark on a map,” IIT’s Spenko said. “We know this will happen and that sometimes it will go undetected. So we have to evaluate the probability it will occur and the impact on the vehicle’s position and orientation. The correction might be to extract a predefined landmark from the surrounding environment and map it to provide redundancy in case of faults such as clock errors.”
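Spenko's lamppost example can be illustrated with nearest-neighbor data association and a chi-square validation gate, a common textbook technique and not necessarily the one IIT uses. In this sketch an extracted feature is matched to the closest map landmark whose normalized squared distance falls inside a 99% gate, and the match is flagged as ambiguous when more than one landmark passes the gate, which is the situation in which a mis-association can go undetected. It assumes an isotropic Gaussian feature error with a known standard deviation; the names and coordinates are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]  # (east_m, north_m)

# 99% chi-square gate for 2 degrees of freedom: -2 ln(0.01), about 9.21.
GATE_99_2DOF = -2.0 * math.log(0.01)


def associate(feature: Point, landmarks: Dict[str, Point],
              sigma_m: float) -> Tuple[Optional[str], bool]:
    """Return (best landmark ID or None, ambiguous flag)."""
    candidates = []
    for landmark_id, (x, y) in landmarks.items():
        # Squared Mahalanobis distance for isotropic error with std dev sigma_m.
        d2 = ((feature[0] - x) ** 2 + (feature[1] - y) ** 2) / sigma_m ** 2
        if d2 <= GATE_99_2DOF:
            candidates.append((d2, landmark_id))
    if not candidates:
        return None, False
    candidates.sort()
    # Several landmarks inside the gate: a wrong match is plausible.
    return candidates[0][1], len(candidates) > 1


lampposts = {"lamppost_a": (0.0, 0.0), "lamppost_b": (3.0, 0.0)}
print(associate((0.2, 0.1), lampposts, sigma_m=0.5))  # clear match
print(associate((1.4, 0.0), lampposts, sigma_m=1.0))  # ambiguous
```

An integrity analysis would go a step further and bound the probability that the true landmark loses to a wrong one, then fold that probability into the position error bound.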

VEHICLE TO ANYTHING

Among the systems and principles of autonomous vehicle operation, a fundamental one will be the adoption of a common V2X standard. Said Hay: “There’s a state of paralysis in the auto industry, largely due to competing standards and the incompatibility of different radio technologies to share data between cars. No automaker will be successful on its own. We all need a common language to realize V2X as a complement to our autonomous sensors.

“If we’re going to communicate with other cars, other manufacturers need to adopt a common standard to send and receive the Basic Safety Message. How, and will, we overcome this paralysis? The answer will define how we cooperate [and compete] with each other down the line.”
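The Basic Safety Message is defined in SAE J2735, and its core data carry quantized integers rather than floating-point values. The sketch below converts a few Part I core fields into those integer units. The scale factors and “unavailable” codes reflect our reading of J2735 and should be checked against the standard; the function and class names are hypothetical; and a real BSM is ASN.1-encoded (UPER) with more fields (elevation, accuracy, acceleration, brakes, vehicle size and others) than shown here.

```python
from dataclasses import dataclass
from typing import Optional

# Units and "unavailable" codes as we read SAE J2735 (verify before use).
LAT_UNAVAILABLE = 900000001      # latitude in 1/10 microdegree
LON_UNAVAILABLE = 1800000001     # longitude in 1/10 microdegree
SPEED_UNAVAILABLE = 8191         # speed in 0.02 m/s units
HEADING_UNAVAILABLE = 28800      # heading in 0.0125 degree units


@dataclass(frozen=True)
class BsmCoreSubset:
    msg_count: int    # 0..127, wraps around
    temp_id: bytes    # 4-octet temporary vehicle ID
    sec_mark_ms: int  # milliseconds within the current minute
    lat: int
    lon: int
    speed: int
    heading: int


def quantize(msg_count: int, temp_id: bytes, sec_mark_ms: int,
             lat_deg: Optional[float], lon_deg: Optional[float],
             speed_mps: Optional[float], heading_deg: Optional[float]) -> BsmCoreSubset:
    """Convert engineering units to J2735-style integers (None -> unavailable)."""
    if len(temp_id) != 4:
        raise ValueError("temporary ID must be 4 octets")
    lat = LAT_UNAVAILABLE if lat_deg is None else round(lat_deg * 1e7)
    lon = LON_UNAVAILABLE if lon_deg is None else round(lon_deg * 1e7)
    # 8190 is the largest valid speed code (163.8 m/s); clamp above it.
    speed = SPEED_UNAVAILABLE if speed_mps is None else min(round(speed_mps / 0.02), 8190)
    # Wrap so that, e.g., 359.995 degrees rounds to 0 rather than 28800.
    heading = (HEADING_UNAVAILABLE if heading_deg is None
               else round((heading_deg % 360.0) / 0.0125) % 28800)
    return BsmCoreSubset(msg_count % 128, temp_id, sec_mark_ms, lat, lon, speed, heading)


msg = quantize(5, b"\x01\x02\x03\x04", 12345, 41.8349, -87.6270, 13.9, 90.0)
print(msg.lat, msg.lon, msg.speed, msg.heading)
```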

The legacy communication link between vehicles is Dedicated Short-Range Communication (DSRC). A newer standard under development and testing is Cellular Vehicle-to-Everything (C-V2X). These two communication standards are incompatible with each other, which has fueled the need for automakers to choose a single standard.

Furthermore, spectrum allocation for these competing technologies remains unclear. In effect, the development of two standards has introduced painful delays in V2X deployment and has paralyzed the auto industry.

DSRC is a wireless communication technology designed to allow vehicles in intelligent transportation systems (ITS) to communicate with other vehicles or with infrastructure. DSRC operates in the 5.9 GHz band of the radio frequency spectrum and is effective over short to medium distances without relying on a cellular network. DSRC uses GNSS for vehicle localization. DSRC-based V2X infrastructure is deployed in the U.S., Europe and Japan.

C-V2X is defined in the cellular specifications of the 3rd Generation Partnership Project (3GPP). Contrary to a common misperception, C-V2X can operate independently of 4G/5G cellular networks: its PC5 sidelink provides direct vehicle-to-vehicle communication using a dedicated 5.9 GHz radio. C-V2X deployment is expected in China in 2020.

“If there’s an opportunity to make the public safer through cooperation on standards, we should be doing that,” Hay added. “True progress cannot be made until competitors agree upon standards.”

Some resolution is likely to come from initiatives such as Colorado’s V2X Technology Safety and Mobility Improvement Project, which is partially funded by a federal Better Utilizing Investments to Leverage Development (BUILD) grant. Its focus is to create a commercial-scale connected vehicle environment using V2X technology. The project will build an approximately 537-mile network providing real-time communication with connected vehicles and will install more than 200 miles of new fiber-optic lines to serve rural communities. Project organizers expect the network to send safety- and mobility-critical messages directly to drivers through infrastructure-to-vehicle (I2V) communication, and to notify the Colorado Department of Transportation of crashes or hazards on the road through vehicle-to-infrastructure (V2I) communication.

AUTONOMY AND THE COMMUNITY

A final area of concern is how autonomous vehicles and existing infrastructure will be adapted to each other. Several studies of infrastructure and autonomous vehicles will help define the systems and principles needed for successful integration into our communities.

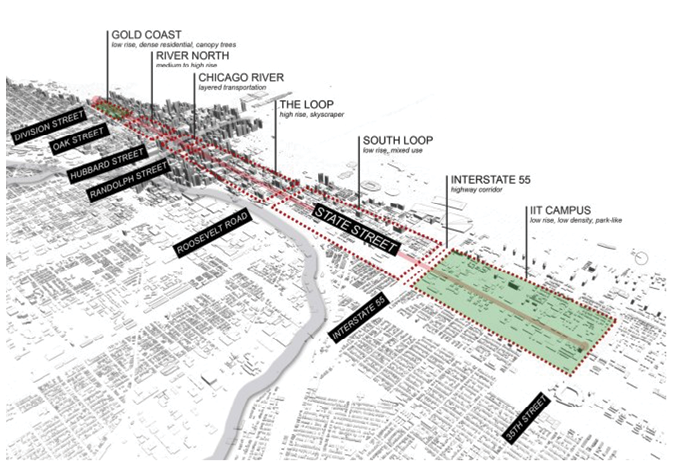

One of the most recent investigations is funded by a three-year National Science Foundation grant to investigators at the Illinois Institute of Technology. Titled “The Urban Design and Policy Implications of Ubiquitous Robots and Navigation Safety,” the project is focused on adapting existing transportation infrastructure in cities to accommodate autonomous vehicles while also addressing the safety, usability, and aesthetic needs and concerns of residents in the surrounding communities.

“Localization,” IIT’s Spenko, one of the grant’s lead researchers, said, “is an imperative for the success of autonomous vehicles—the sensors must recognize features such as bridge railings or pedestrians or bushes and trees in order to identify the robot’s location. Therefore, we must link urban landscape and navigational safety. We can fine-tune and test our navigation safety algorithms, and architects can see how their designs affect autonomous operations and safety. We are actively looking at ways to use landscape as a code to help the robots better localize themselves, especially in GNSS-denied environments.”

Specifically, the grant project brings robotic and self-driving vehicle engineers together with city planners, architects and landscape architects to influence and design urban spaces to suit this future autonomous environment.

Another project involves a $10 million federal BUILD grant for the Youngstown SMART2 Network infrastructure enterprise in Youngstown, Ohio. The project incorporates dedicated autonomous shuttle lanes and a fiber-optic conduit for high-speed broadband, enabling the roadway data collection needed to support autonomous transit shuttles serving major local anchor institutions.

In addition, the Urban Core Riverfront Revitalization & Bay Street Innovation Corridor project in Jacksonville, Florida, will replace the Hart Bridge Expressway with traffic-calming measures, bicycle/pedestrian paths and an autonomous transit network supported by a V2I communications system.

From solutions linking landscape and navigational safety to compatible standards, real-time localization and mapping, and, most importantly, assured, scientifically demonstrated integrity of position and navigation data, the road to autonomous vehicle operation has major hurdles to overcome. But experts and innovators are confident that answers are on the horizon.